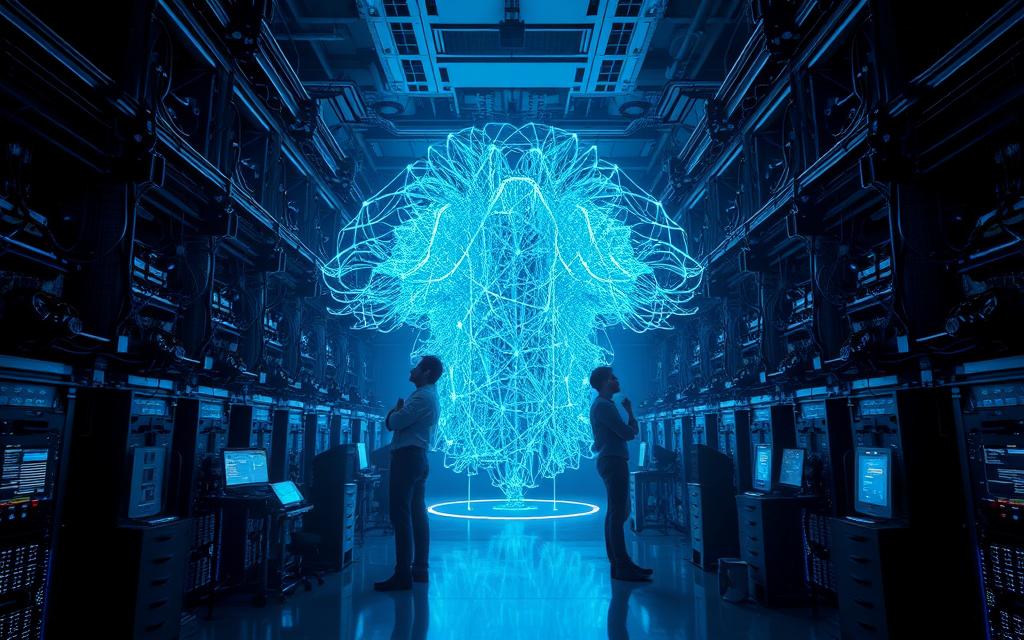

Modern artificial intelligence relies on computational frameworks loosely modelled on the human brain’s structure. These systems, known as neural networks, process information through interconnected layers, enabling machines to recognise patterns in vast data sets. Their ability to deliver precise outcomes has made them central to advancements in machine learning and AI development.

Traditional data analysis methods often struggle with complexity and scale. By contrast, neural networks automate tasks that once demanded extensive human input. This shift allows professionals to focus on strategic decisions rather than manual computations.

The evolution of these systems reflects broader changes in data science. Organisations now prioritise AI-driven solutions for their speed and accuracy. As a result, understanding how to evaluate and refine these models has become a critical skill for practitioners.

This section explores why neural network analysis matters in today’s tech landscape. We’ll examine the role of neural networks in transforming raw data into actionable insights and prepare readers for deeper technical discussions in subsequent sections.

Introduction to Neural Networks and Their Role in AI

The backbone of modern AI systems consists of interconnected units mirroring neurological processes. These computational frameworks process information through layered architectures, enabling machines to interpret complex patterns. Their design principles borrow heavily from biological systems, creating a bridge between computer science and neuroscience.

Core Operational Principles

Artificial neurons form the building blocks of these systems. Each unit receives inputs, applies mathematical functions, and transmits outputs to connected nodes. This mimics how biological nerve cells exchange electrochemical signals:

| Feature | Biological Networks | Artificial Networks |
|---|---|---|
| Basic Unit | Neurons | Artificial Neurons |
| Signal Transmission | Synaptic Connections | Weighted Edges |
| Learning Mechanism | Neuroplasticity | Backpropagation |
| Processing Speed | Milliseconds | Nanoseconds |

Biological Inspiration in Design

Like the human brain, artificial networks strengthen frequently used connections. This adaptive quality allows both systems to improve performance over time. While simplified compared to organic counterparts, these models capture essential aspects of cognitive processing.

Key differences emerge in scalability and precision. Biological systems excel in energy efficiency, while computational versions handle repetitive tasks with unwavering accuracy. This synergy continues to drive innovations across machine learning applications.

How to Analyse Neural Networks

Effective evaluation of AI systems begins with understanding their layered architecture. Three core components handle information processing: input, hidden, and output layers. Each plays distinct roles in transforming raw data into usable insights.

Understanding Input, Hidden and Output Layers

The input layer acts as the system’s reception desk. It receives feature values, drawn from sources like spreadsheets or APIs, and passes them on without transforming them. Data should therefore already be in a standardised numeric format when it reaches this stage, so that later layers process it consistently.

Hidden layers form the computational engine. These intermediate stages apply mathematical operations to detect patterns and relationships. Through successive transformations, they convert basic inputs into abstract representations essential for decision-making.

Final results emerge through the output layer. This component translates processed data into actionable predictions or classifications. Its structure varies based on task requirements – binary outputs for yes/no decisions or multiple nodes for complex categorisation.
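The flow from input to hidden to output layer can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production model: the 3-2-1 layout, the weight values and the names `dense` and `forward` are all assumptions chosen for readability.

```python
import math


def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each output is activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters for a 3-input, 2-hidden, 1-output network.
HIDDEN_WEIGHTS = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_BIASES = [0.0, 0.1]
OUTPUT_WEIGHTS = [[1.0, -1.0]]
OUTPUT_BIASES = [0.0]


def forward(features):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(features, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, sigmoid)[0]


probability = forward([1.0, 2.0, 3.0])
print(f"Predicted probability: {probability:.4f}")
```

The single sigmoid output suits a yes/no decision. A multi-class task would use several output nodes instead.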

Real-World Examples in Pattern Recognition

Facial verification systems demonstrate layered processing in action. Input layers receive pixel data from cameras, while hidden layers identify edges and textures. The output layer compares these features against stored profiles to authenticate identities.

Retail recommendation engines use similar principles. They process customer behaviour data through hidden layers to detect purchasing patterns. Output layers then suggest products matching individual preferences, driving engagement through personalised offers.

Exploring Neural Network Architectures and Components

The power of artificial intelligence systems stems from carefully engineered components working in harmony. These elements transform raw data into meaningful outputs through mathematical precision. Each plays a distinct role in pattern recognition and decision-making processes.

Key Elements: Neurons, Weights and Activation Functions

Artificial neurons act as decision-making units within computational frameworks. They receive multiple inputs, apply weight adjustments, and pass results to connected nodes. This mimics biological signal transmission while enabling scalable problem-solving.

Weights determine which features influence outcomes most significantly. Each input is multiplied by its weight before the neuron sums the results, and training adjusts these values so that impactful data patterns are prioritised. Weights with larger magnitudes amplify relevant signals, while those near zero suppress noise.

| Component | Role | Example |
|---|---|---|
| Input | Raw data reception | Pixel values in images |
| Weight | Feature prioritisation | 0.87 for eye shape in facial recognition |
| Activation Function | Non-linear transformation | ReLU, Sigmoid |
| Bias | Output adjustment | +0.5 offset in classification |

Activation functions introduce essential non-linearity into otherwise linear calculations. Options like sigmoid or ReLU determine how strongly each neuron passes its signal forward. This enables systems to model intricate relationships between variables effectively.
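To make the interplay of weights, bias and activation concrete, here is a small sketch of a single artificial neuron. The 0.87 weight and the 0.5 bias echo the table above; the remaining numbers and the function name `neuron` are invented for illustration.

```python
import math


def relu(z):
    """ReLU: zero for negative sums, identity for positive ones."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid: maps the weighted sum to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)


inputs = [0.2, 0.9]      # hypothetical feature values
weights = [0.87, -0.4]   # first weight from the table, second made up
bias = 0.5               # offset from the table

relu_output = neuron(inputs, weights, bias, relu)
sigmoid_output = neuron(inputs, weights, bias, sigmoid)
print(f"ReLU: {relu_output:.3f}, sigmoid: {sigmoid_output:.3f}")
```

The same weighted sum produces different outputs depending on the activation, which is why the choice of function shapes what a network can learn.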

Tools and Techniques for Effective Neural Network Analysis

Quality outcomes in machine learning hinge on structured preparatory work. Before deploying computational systems, practitioners must address two critical phases: data readiness and architectural suitability. These elements form the scaffolding for reliable pattern recognition and decision-making capabilities.

Data Preparation and Model Selection

Curating a robust dataset demands meticulous attention. Sources must represent real-world scenarios while avoiding skewed distributions. Techniques like normalisation and outlier removal standardise inputs, ensuring consistent processing across layers.
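As a rough sketch of these two preparation steps, the snippet below removes outliers using a z-score cut-off and then applies min-max normalisation. The threshold of 2.0, the sample values and both function names are assumptions. Real projects often use other rules, such as interquartile ranges.

```python
import statistics


def remove_outliers(values, z_threshold=2.0):
    """Drop values lying more than z_threshold standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return list(values)
    return [v for v in values if abs(v - mean) / stdev <= z_threshold]


def min_max_normalise(values):
    """Rescale values linearly so the smallest becomes 0 and the largest 1."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        return [0.0 for _ in values]
    return [(v - lowest) / (highest - lowest) for v in values]


raw = [12.0, 15.0, 14.0, 13.0, 16.0, 95.0]   # 95.0 is an obvious outlier
cleaned = remove_outliers(raw)
scaled = min_max_normalise(cleaned)
print(cleaned)
print(scaled)
```

Order matters here: normalising before removing the outlier would squeeze the genuine readings into a narrow band near zero.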

Choosing an appropriate framework depends on data characteristics. For text-based tasks, recurrent architectures excel at sequential analysis. Image classification benefits from convolutional structures that prioritise spatial relationships. Matching the model to the problem type reduces training time and improves accuracy.

Training Neurons and Weight Calibration Strategies

Weight adjustments dictate which features influence outcomes. Initial values are randomised, then refined using gradients computed through backpropagation. Regularisation methods like dropout reduce overfitting by deactivating random neurons during training iterations.
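The cycle of random initialisation followed by gradual refinement can be illustrated with a single sigmoid neuron trained by gradient descent. With only one neuron, backpropagation reduces to the update rule shown here. The learning rate, epoch count, seed and the logical-OR dataset are illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, epochs=2000, learning_rate=0.5, seed=0):
    """Fit one sigmoid neuron with per-sample gradient descent."""
    rng = random.Random(seed)
    n_features = len(samples[0][0])
    # Start from small random weights, as described above.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_features)]
    bias = 0.0
    for _ in range(epochs):
        for features, target in samples:
            z = sum(w * x for w, x in zip(weights, features)) + bias
            # For cross-entropy loss, the gradient w.r.t. z is (prediction - target).
            error = sigmoid(z) - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Toy dataset: logical OR, which a single neuron can separate.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train_neuron(OR_DATA)
predictions = [
    round(sigmoid(sum(w * x for w, x in zip(weights, features)) + bias))
    for features, _ in OR_DATA
]
print(predictions)
```

Multi-layer networks apply the same idea, with backpropagation passing the error signal backwards through each layer in turn.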

Validation splits help assess generalisation potential. Analysts typically reserve 20-30% of data for testing model performance. This practice identifies memorisation tendencies early, allowing corrective adjustments before final deployment.
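A hold-out split of this kind takes only a few lines. The sketch below shuffles the rows and reserves 20% for validation. The function name, the fixed seed and the use of integers as stand-in rows are assumptions.

```python
import random


def train_validation_split(rows, validation_fraction=0.2, seed=42):
    """Shuffle the rows and hold back a fraction of them for validation."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


# Integers stand in for real records here.
train_rows, validation_rows = train_validation_split(range(100), 0.2)
print(len(train_rows), len(validation_rows))
```

Random shuffling assumes the rows are independent. Time-ordered data usually calls for a chronological split instead.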

Practical Guide to Using Neural Networks in Data Analysis

Implementing computational models in analytical workflows demands careful planning. Professionals must align architectural choices with specific data characteristics to achieve reliable outcomes. This approach ensures systems deliver actionable insights across diverse business scenarios.

Step-by-Step Process for Model Deployment

Begin by matching data types to suitable frameworks. Text-based tasks like sentiment analysis require recurrent architectures, while image classification benefits from convolutional structures. This strategic pairing optimises processing efficiency from the outset.

| Data Type | Optimal Architecture | Common Use Cases |
|---|---|---|
| Textual | RNN/LSTM | Sentiment analysis, translation |
| Tabular | Multilayer Perceptron | Credit scoring, sales forecasts |
| Graph | Graph Neural Network | Social network mapping |
| Visual | Convolutional Network | Medical imaging, object detection |

Post-deployment monitoring proves crucial for sustained performance. Establish metrics tracking prediction accuracy and processing time. Regular model retraining with updated data sets maintains relevance amidst evolving business needs.
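One lightweight way to track both metrics is a small wrapper around the prediction call. The class below is a hypothetical sketch, not part of any monitoring library, and `toy_model` is a stand-in for a deployed model.

```python
import time


class PredictionMonitor:
    """Accumulates accuracy and latency figures for a deployed model."""

    def __init__(self):
        self.total = 0
        self.correct = 0
        self.seconds = 0.0

    def record(self, model, features, actual):
        """Time one prediction and compare it with the known outcome."""
        start = time.perf_counter()
        predicted = model(features)
        self.seconds += time.perf_counter() - start
        self.total += 1
        self.correct += int(predicted == actual)
        return predicted

    def accuracy(self):
        return self.correct / self.total if self.total else 0.0

    def mean_latency(self):
        return self.seconds / self.total if self.total else 0.0


def toy_model(score):
    """Stand-in model: flags any score of 0.5 or above."""
    return score >= 0.5


monitor = PredictionMonitor()
for score, outcome in [(0.9, True), (0.2, False), (0.6, False), (0.4, False)]:
    monitor.record(toy_model, score, outcome)

print(f"accuracy={monitor.accuracy():.2f}, "
      f"mean latency={monitor.mean_latency():.6f}s")
```

A sustained drop in accuracy on recent data is a common trigger for the retraining mentioned above.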

Scalability planning prevents bottlenecks during expansion. Cloud-based solutions offer flexible compute resources for growing analysis requirements. Document workflows thoroughly to enable seamless team collaboration across deployment phases.

Applications of Neural Networks Across Industries

Cutting-edge computational systems now drive innovation far beyond research labs. From hospital wards to stock exchanges, these technologies reshape operational landscapes through pattern recognition and adaptive learning.

Revolutionising Diagnostics and Financial Security

Medical teams harness neural networks to interpret complex images with unprecedented accuracy. Systems trained on large collections of labelled scans reportedly detect tumours in X-rays 30% faster than human radiologists.

“These tools don’t replace clinicians – they amplify diagnostic precision,” notes a Cambridge University AI researcher.

Financial institutions combat fraud through real-time transaction monitoring. Machine learning models identify suspicious relationships between account activities, flagging 92% of fraudulent cases before funds leave accounts. This approach reduces false positives by 40% compared to traditional rule-based systems.

Retail giants deploy these systems to decode consumer behaviour. By analysing browsing patterns and purchase histories, algorithms generate prediction models that boost conversion rates by 18%. Personalised recommendations are often estimated to account for around 35% of Amazon’s annual revenue.

Manufacturing sectors benefit through predictive maintenance solutions. Sensors feed equipment performance data into neural networks, forecasting machinery failures with 89% accuracy. This proactive approach slashes downtime costs by £230,000 annually for average UK factories.

Advanced Deep Learning Strategies and Optimisation Techniques

Breakthroughs in computational architectures have redefined what’s achievable in pattern recognition. Two innovations stand out for overcoming historical limitations: residual networks and memory-enhanced frameworks. These approaches tackle fundamental challenges in training sophisticated models.

Implementing ResNets and Skip Connections

Before residual connections, very deep models were hard to train: beyond a few dozen layers, vanishing gradients and degrading accuracy often stalled progress. Residual networks (ResNets) introduced skip connections that bypass one or more layers, preserving signal strength. This architectural tweak enables training of systems with over 1,000 layers while maintaining stability.

| Component | Function | Impact |
|---|---|---|
| Skip Connection | Bypass layer blocks | Prevents gradient decay |
| Residual Block | Learn feature differences | Accelerates convergence |
| Identity Mapping | Maintain input integrity | Simplifies optimisation |
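The core idea of a residual block, output = F(x) + x, fits in a few lines. The sketch below uses a single fully connected ReLU layer for F, which is a deliberate simplification: real ResNet blocks typically stack convolutions with batch normalisation. All names and values are illustrative.

```python
def relu(z):
    return max(0.0, z)


def transform(x, weights, biases):
    """F(x): one fully connected ReLU layer standing in for the block's layers."""
    return [
        relu(sum(w * v for w, v in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def residual_block(x, weights, biases):
    """Add the untouched input back onto the transformed signal: F(x) + x."""
    return [f + xi for f, xi in zip(transform(x, weights, biases), x)]


signal = [1.0, -2.0]

# With all-zero parameters F(x) is zero, so the block reduces to the identity.
zero_weights = [[0.0, 0.0], [0.0, 0.0]]
zero_biases = [0.0, 0.0]
identity_output = residual_block(signal, zero_weights, zero_biases)

# Hypothetical non-zero parameters: the block learns only the difference from x.
weights = [[0.5, 0.0], [0.0, 0.5]]
adjusted_output = residual_block(signal, weights, zero_biases)
print(identity_output, adjusted_output)
```

Because the input always has a direct path to the output, a block that has learned nothing still passes its signal through intact. This is the identity mapping listed in the table.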

“ResNets transformed our ability to handle hierarchical data patterns that were previously computationally prohibitive,” observes a machine learning specialist at Imperial College London.

Leveraging RNNs and LSTMs for Time Series Analysis

Recurrent neural architectures excel with sequential data like stock prices or sensor readings. Long Short-Term Memory (LSTM) units enhance these frameworks through gated memory cells. Three specialised components manage information flow:

- Forget gates discard irrelevant historical data

- Input gates evaluate new information importance

- Output gates control prediction influences

This structure proves vital for applications requiring time-aware decisions. Energy firms use LSTMs to forecast grid demand 72 hours ahead with 94% accuracy. Transport networks apply them to predict congestion patterns during peak hours.
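The three gates can be seen at work in a single LSTM time step. The sketch below uses scalar inputs and states to stay readable, whereas real cells operate on vectors. Alongside the three gates it includes the candidate value that the input gate scales. The parameter names and numbers in `PARAMS` are invented.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell; p holds its weights and biases."""
    forget = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])        # what to discard
    write = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])         # input gate
    candidate = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])   # proposed new memory
    expose = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])        # output gate
    cell = forget * c_prev + write * candidate   # updated long-term memory
    hidden = expose * math.tanh(cell)            # value passed to the next step
    return hidden, cell


# Hypothetical parameters; a trained model would learn these.
PARAMS = {
    "wf": 0.5, "uf": 0.1, "bf": 1.0,
    "wi": 0.6, "ui": 0.2, "bi": 0.0,
    "wg": 0.9, "ug": 0.3, "bg": 0.0,
    "wo": 0.4, "uo": 0.1, "bo": 0.0,
}

hidden, cell = 0.0, 0.0
for reading in [0.1, 0.4, 0.3, 0.8]:   # e.g. a short run of sensor readings
    hidden, cell = lstm_step(reading, hidden, cell, PARAMS)
print(f"hidden={hidden:.4f}, cell={cell:.4f}")
```

The cell state carries information across steps, while the hidden state is what downstream layers use for each prediction.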

Successful implementation demands careful hyperparameter tuning. Learning rates between 0.001 and 0.01 often balance speed with stability. Regularisation techniques like dropout (20-50% rates) help prevent overfitting in complex temporal models.

Best Practices for Training and Model Evaluation

Mastering computational systems requires methodical approaches to parameter adjustment and validation. Central to this process is empirical risk minimisation, where models refine their predictions by reducing discrepancies between expected and actual outputs. Combined with regularisation and validation, this principle guides systems towards reliable pattern recognition rather than memorisation of training examples.

Gradient-based optimisation drives effective learning cycles. Backpropagation calculates error derivatives across interconnected nodes, and the optimiser uses them to adjust layer weights. Modern variants like the Adam optimiser adapt learning rates dynamically, which often accelerates convergence while maintaining stability.
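To show how Adam adapts its step size, here is a minimal sketch that minimises a one-parameter quadratic. The hyperparameter defaults follow commonly used values. The target function, starting point and step count are assumptions chosen so the result is easy to check.

```python
import math


def adam_minimise(gradient, start, learning_rate=0.01, beta1=0.9,
                  beta2=0.999, epsilon=1e-8, steps=3000):
    """Minimise a one-parameter function with the Adam update rule."""
    theta = start
    m = 0.0   # running mean of gradients (first moment)
    v = 0.0   # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = gradient(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)   # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        theta -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return theta


# Toy loss (w - 3)^2 has gradient 2 * (w - 3) and its minimum at w = 3.
minimum = adam_minimise(lambda w: 2.0 * (w - 3.0), start=0.0)
print(f"Estimated minimum: {minimum:.4f}")
```

Dividing by the root of the second moment makes each step roughly the size of the learning rate while gradients are consistent, then shrinks it as they fade near the minimum.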

Validation strategies prevent misleading performance claims. Key techniques include:

- K-fold cross-validation for robust accuracy estimates

- Stratified sampling to preserve data distributions

- Time-based splits for temporal patterns
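K-fold cross-validation, the first technique in the list, can be sketched as an index generator. This version assumes the data has already been shuffled and does not stratify. The function name is hypothetical.

```python
def k_fold_indices(n_samples, k):
    """Return k (training, validation) index pairs covering every sample once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    folds = []
    start = 0
    for size in fold_sizes:
        stop = start + size
        validation = list(range(start, stop))
        training = [i for i in range(n_samples) if i < start or i >= stop]
        folds.append((training, validation))
        start = stop
    return folds


folds = k_fold_indices(10, 3)
for training, validation in folds:
    print(f"train on {len(training)} samples, validate on {validation}")
```

Each sample is used for validation exactly once, so averaging the per-fold scores gives a steadier estimate than a single split.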

| Metric | Use Case | Threshold |
|---|---|---|
| Precision | Fraud detection | >0.85 |
| Recall | Medical diagnosis | >0.90 |
| F1 Score | Class imbalance | >0.80 |
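The three metrics in the table follow directly from counts of true positives, false positives and false negatives. The sketch below computes them for binary labels. The sample labels are made up for illustration.

```python
def classification_metrics(actual, predicted):
    """Compute precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(actual, predicted))
    true_pos = sum(1 for a, p in pairs if a == 1 and p == 1)
    false_pos = sum(1 for a, p in pairs if a == 0 and p == 1)
    false_neg = sum(1 for a, p in pairs if a == 1 and p == 0)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


actual = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

precision, recall, f1 = classification_metrics(actual, predicted)
print(f"precision={precision:.2f}, recall={recall:.2f}, f1={f1:.2f}")
```

Overall accuracy here is 80%, yet one in four positives is missed. This is why recall is the metric to watch in settings such as medical diagnosis.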

Detecting overfitting demands vigilance. Analysts monitor validation loss curves for divergence from training metrics. Regularisation methods like dropout (randomly deactivating 20-30% of a layer’s units during training) prove particularly effective for complex architectures.
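Dropout itself is simple to sketch. The version below is the "inverted" form, which rescales the surviving activations so their expected value is unchanged. That choice, along with the 25% rate and the fixed seed, is an assumption for illustration.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a random share of units and rescale the rest."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)   # no units are dropped at inference time
    keep_probability = 1.0 - rate
    return [
        a / keep_probability if rng.random() < keep_probability else 0.0
        for a in activations
    ]


rng = random.Random(0)
hidden_activations = [1.0] * 1000

dropped = dropout(hidden_activations, rate=0.25, rng=rng)
zero_share = dropped.count(0.0) / len(dropped)
print(f"Share of units deactivated: {zero_share:.2%}")
```

Because a different random subset is silenced on every pass, the network cannot rely on any single unit, which discourages memorisation.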

“Proper evaluation isn’t about perfect scores – it’s about understanding where and why models fail,”

Batch normalisation standardises the activations flowing between layers, an approach originally motivated by reducing internal covariate shift. This technique often improves training speed, with gains of around 30% sometimes reported, while enhancing generalisation across diverse datasets.
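In its simplest form, batch normalisation subtracts the batch mean, divides by the batch standard deviation, then applies a learnable scale and shift. The sketch below handles one feature across a mini-batch. The names `gamma` and `beta` follow common convention, and the sample values are made up.

```python
import math


def batch_normalise(batch, gamma=1.0, beta=0.0, epsilon=1e-5):
    """Standardise one feature across a mini-batch, then scale and shift it."""
    mean = sum(batch) / len(batch)
    variance = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [
        gamma * (x - mean) / math.sqrt(variance + epsilon) + beta
        for x in batch
    ]


activations = [2.0, 4.0, 6.0, 8.0]   # hypothetical outputs of one unit
normalised = batch_normalise(activations)

new_mean = sum(normalised) / len(normalised)
new_variance = sum((x - new_mean) ** 2 for x in normalised) / len(normalised)
print(f"mean={new_mean:.4f}, variance={new_variance:.4f}")
```

The small `epsilon` guards against division by zero when a batch has no variance. At inference time, frameworks typically substitute running averages for the batch statistics.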

Conclusion

Neural networks have evolved into indispensable tools for uncovering hidden relationships in complex datasets. Their ability to process visual, textual, and numerical information revolutionises decision-making across sectors. From medical image classification to financial forecasting, these systems transform raw inputs into actionable predictions with remarkable precision.

Organisations leverage different network architectures based on specific challenges. Convolutional models excel in analysing medical scans, while recurrent types decode time-sensitive patterns in market trends. This adaptability makes them vital for tasks requiring rapid processing of multi-layered data.

The true power of these systems lies in their continuous learning capabilities. As they encounter new inputs, they refine their predictive accuracy – a feature particularly valuable in dynamic fields like e-commerce and cybersecurity. Proper implementation requires matching model types to dataset characteristics and business objectives.

With ongoing advancements in computational memory and processing efficiency, neural networks will remain central to AI-driven innovation. Their capacity to handle intricate tasks positions them as essential tools for organisations aiming to maintain competitive advantage in data-centric environments.

FAQ

What distinguishes neural networks from traditional algorithms?

Neural networks learn directly from raw data through interconnected layers, adjusting weights during training. Unlike rule-based systems, they autonomously identify patterns in complex datasets like images or time-series data.

How do hidden layers enhance model performance?

Hidden layers process input data through non-linear transformations, enabling networks to capture intricate relationships. Deeper architectures, such as ResNets, improve feature extraction for tasks like image classification.

Why are activation functions critical in these systems?

Activation functions introduce non-linearity, allowing networks to model complex behaviours. Functions like ReLU or sigmoid determine neuron outputs, directly influencing learning and prediction accuracy.

Which industries benefit most from recurrent neural networks?

Industries like finance use RNNs and LSTMs for time-series forecasting, while healthcare applies them to patient monitoring. Their memory retention suits sequential data analysis.

What steps ensure effective data preparation?

Normalisation, handling missing values, and augmenting datasets improve model robustness. Techniques like cross-validation help detect overfitting during training phases.

How do convolutional neural networks process visual information?

CNNs use filters to detect spatial hierarchies in images, such as edges or textures. Pooling layers downsample features, enhancing efficiency in tasks like object recognition.

What role do weights play in model calibration?

Weights adjust connection strengths between neurons during backpropagation. Optimisation algorithms like Adam fine-tune these parameters to minimise prediction errors.

Can neural networks replicate human brain functions?

While inspired by biological neurons, artificial networks simplify brain processes. They excel in pattern recognition but lack the adaptability and contextual understanding of human cognition.

What are common challenges in deploying deep learning models?

Computational demands, overfitting on small datasets, and interpretability issues arise. Strategies like transfer learning or dropout layers mitigate these risks during deployment.

How do skip connections improve training efficiency?

Skip connections in architectures like ResNets allow gradients to bypass layers, mitigating vanishing-gradient issues. This enables deeper networks without sacrificing convergence speed.