Modern artificial intelligence advancements, from chatbots to image generators, demand substantial computational power. Your hardware selection directly impacts efficiency, accuracy, and scalability in these tasks. Specialised acceleration cores and ample memory bandwidth have become critical differentiators, enabling complex neural networks to operate smoothly even on local machines.

This guide addresses the balance between technical specifications and budget considerations. While entry-level options suffice for basic models, training sophisticated algorithms often requires high-performance components. Key factors include VRAM capacity for handling large datasets and optimised architectures that reduce processing bottlenecks.

We’ve structured this resource to cater to diverse experience levels. Casual enthusiasts will find straightforward recommendations, while developers gain insights into benchmarking metrics and thermal management. Practical cost-benefit analyses accompany each suggestion, reflecting current UK market trends and energy efficiency standards.

Understanding Deep Learning GPU Requirements

Artificial intelligence systems thrive on rapid mathematical operations. Unlike general-purpose processors, specialised components handle complex calculations through simultaneous execution. This capability becomes vital when managing neural networks with billions of parameters.

Accelerating AI Through Parallel Computation

Modern graphics processing units contain thousands of cores designed for concurrent task management. These architectures excel at matrix multiplications, the backbone of neural network training. Published comparisons suggest models like ResNet-50 can train up to around 50× faster than on CPU-only systems, although the exact ratio depends on the hardware being compared.

Transformer-based architectures particularly benefit from this approach. Attention mechanisms and convolutional layers rely on simultaneous data processing across multiple channels. Real-time applications such as language translation or medical imaging become feasible with these performance gains.
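To see why matrix multiplication parallelises so well, consider a minimal pure-Python sketch of a dense layer's forward pass. The function names and the toy values are our own illustrations, not taken from any library; the point is only that each output element is an independent dot product.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (plain lists of lists)."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Every output element is an independent dot product, so the work can be
    # spread across as many cores as there are output elements.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_flops(m, k, n):
    """Operation count: one multiply and one add per term of each dot product."""
    return 2 * m * k * n


# Toy dense layer: 2 samples with 2 features, projected to 3 outputs.
batch = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]
outputs = matmul(batch, weights)
flops = matmul_flops(2, 2, 3)
```

The toy layer needs only 24 operations, but the count grows with the product of all three dimensions, which is why real layers overwhelm sequential hardware.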

Limitations of Traditional Processor Designs

Central processing units prioritise sequential operations, handling tasks one after another. While effective for everyday computing, this approach creates bottlenecks during large-scale model training. A single high-end CPU might require weeks to process datasets that modern accelerators complete in days.

Energy consumption presents another concern. Parallel components often deliver better performance-per-watt ratios, crucial for UK energy cost considerations. Independent benchmarks demonstrate up to 80% reduction in electricity costs when using purpose-built hardware for intensive workloads.

Determining Which GPU to Get for Deep Learning

Selecting appropriate hardware begins with mapping computational needs to project goals. Three primary factors dictate specifications: model architecture complexity, input data volumes, and operational frequency. A ResNet-style image classifier might function smoothly on consumer-grade components, while transformer-based systems handling multilingual datasets demand enterprise solutions.

Budget-conscious developers often start with RTX-series accelerators. These handle common vision processing tasks and moderate language models effectively. For prototyping generative architectures or processing 4D medical scans, data centre-grade components like NVIDIA’s H100 become necessary due to enhanced memory subsystems.

Consider these practical scenarios:

- Students running MNIST digit recognition: 8GB VRAM suffices

- Startups developing recommendation engines: 24GB+ memory recommended

- Research teams training multimodal systems: Multi-accelerator configurations essential

Cloud services present viable alternatives for intermittent workloads. Platforms like AWS EC2 allow scaling resources during intensive training phases while maintaining local hardware for daily experimentation. UK-based teams should evaluate electricity tariffs and cooling infrastructure when opting for on-premises setups.

Final decisions hinge on balancing immediate requirements against future scalability. A £1,500 workstation might suffice for current experiments, but expanding into production environments often necessitates £15,000+ investments in specialised hardware.

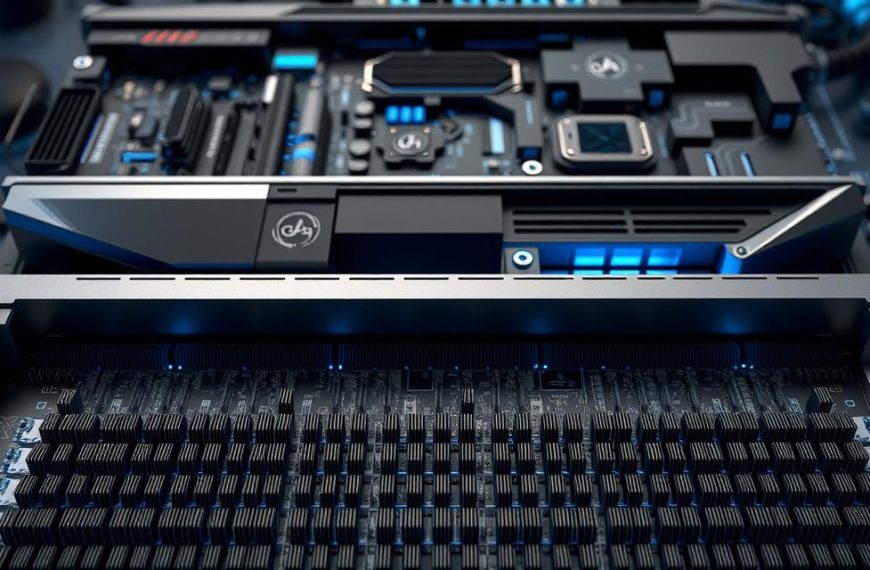

Key GPU Features for Deep Learning

Modern acceleration hardware relies on specialised components that dramatically affect artificial intelligence workloads. While raw clock speeds matter, architectural innovations deliver tangible improvements in real-world scenarios.

Importance of CUDA Cores and Tensor Cores

Tensor Cores revolutionise matrix operations through mixed-precision calculations. These dedicated units handle FP16/FP32 computations 2-3x faster than standard processing cores. Fourth-generation designs now support FP8 data types, slashing energy use by 40% in Llama 2 training.

CUDA cores remain essential for general parallel tasks like data preprocessing. However, benchmarks show Tensor Cores deliver 73% faster ResNet-50 training compared to CUDA-only configurations. Architectural evolution matters – Ada Lovelace chips process 2x more operations per cycle than older Ampere designs.

Memory Bandwidth and VRAM Considerations

High-speed memory interfaces prevent computational bottlenecks. Modern accelerators require 900GB/s+ bandwidth to keep Tensor Cores active. Systems with insufficient throughput waste 55% of processing potential during BERT training runs.
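A simple roofline estimate makes the bandwidth argument concrete. The sketch below is a back-of-envelope model rather than a benchmark: the function name is our own, and the example figures (165 FP16 TFLOPS and 1,008GB/s) are the RTX 4090 values quoted elsewhere in this guide.

```python
def attainable_tflops(peak_tflops, bandwidth_gb_per_s, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic.

    flops_per_byte is the arithmetic intensity of the workload, i.e. how many
    operations it performs for each byte it moves to or from memory.
    """
    # GB/s multiplied by FLOPs/byte gives GFLOP/s; divide by 1000 for TFLOP/s.
    memory_bound_tflops = bandwidth_gb_per_s * flops_per_byte / 1000
    return min(peak_tflops, memory_bound_tflops)


# A workload doing 50 operations per byte cannot keep the cores busy.
low_intensity = attainable_tflops(165, 1008, 50)
# A workload doing 500 operations per byte is limited by compute instead.
high_intensity = attainable_tflops(165, 1008, 500)
```

Under these assumptions the low-intensity workload tops out at 50.4 TFLOPS, under a third of the card's peak, while the high-intensity one reaches the full 165 TFLOPS.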

VRAM capacity dictates model complexity:

| Application | Parameters | Minimum VRAM |
|---|---|---|
| Image Classification | 50 million | 8GB |
| Speech Recognition | 300 million | 16GB |
| Language Models | 175 billion | Several 80GB accelerators (about 350GB for FP16 weights alone) |

Prioritise components with GDDR6X or HBM2e memory. These technologies provide roughly 30% or more bandwidth than standard GDDR6, which is crucial for transformer-based architectures.

Deep Learning Models and Their Demands

Neural network architectures vary dramatically in their hunger for computational resources. Mobile-friendly designs might sip power from a smartphone processor, while enterprise-scale systems gulp teraflops from server farms. This spectrum directly shapes hardware choices for developers across the UK.

Large Models Versus Compact Architectures

Transformer-based systems like GPT-3 demand Herculean memory allocations. Each parameter in these large models requires storage for weights, gradient calculations, and optimiser states. Training a 175-billion-parameter system needs far more than a single 80GB accelerator can hold: the FP16 weights alone occupy about 350GB, so the work has to be spread across many devices, well beyond consumer-grade components.
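The per-parameter accounting above (weights, gradients, optimiser states) can be turned into a rough estimate. The sketch below assumes a common mixed-precision setup with the Adam optimiser: 2 bytes for FP16 weights, 2 bytes for FP16 gradients, and 12 bytes of FP32 optimiser state (a master copy of the weights plus two moment estimates). Activations are ignored, so real usage will be higher; the function name and defaults are illustrative.

```python
def training_memory_gb(num_params, bytes_weights=2, bytes_grads=2,
                       bytes_optimizer=12):
    """Approximate memory for weights, gradients and optimiser state.

    Returns gigabytes (10^9 bytes). Activation memory is not included.
    """
    bytes_per_param = bytes_weights + bytes_grads + bytes_optimizer
    return num_params * bytes_per_param / 1e9


bert_base = training_memory_gb(110e6)    # 110 million parameters
gpt3_scale = training_memory_gb(175e9)   # 175 billion parameters
weights_only = training_memory_gb(175e9, bytes_grads=0, bytes_optimizer=0)
```

On these assumptions a 110-million-parameter model needs under 2GB before activations, while a 175-billion-parameter model needs about 2,800GB for training state and 350GB for the FP16 weights alone.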

Compact alternatives offer practical solutions:

- ResNet-50 vision networks operate smoothly with 8GB memory

- LSTM-based speech recognisers need under 16GB for real-time tasks

- Pruned BERT variants reduce parameter counts by 40% with minimal accuracy loss

“Memory-efficient techniques let researchers punch above their hardware weight class. Gradient checkpointing slashes VRAM use by 65% in our transformer experiments.”

Architecture selection proves crucial. Convolutional networks excel in image processing with modest resources, while attention mechanisms in language models escalate demands. Teams often deploy model parallelism – splitting networks across multiple accelerators – when facing memory ceilings.

| Model Type | Parameters | Minimum VRAM |
|---|---|---|
| MobileNetV3 | 5 million | 4GB |
| BERT-Base | 110 million | 16GB |
| GPT-3 | 175 billion | Several 80GB accelerators |

Energy-conscious developers increasingly adopt quantisation methods. Converting weights from FP16 to 8-bit precision often maintains accuracy while halving weight memory (a fourfold saving against FP32), a vital strategy under rising UK energy costs.
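As a minimal illustration of the idea, the sketch below applies symmetric per-tensor quantisation, one of several possible schemes, to a handful of made-up weights. Each value is stored as an integer between -127 and 127 (one byte) plus a single shared scale factor. The function names are our own and do not correspond to any particular framework's API.

```python
def quantise_int8(weights):
    """Symmetric per-tensor quantisation to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantise(quantised, scale):
    """Map the stored integers back to approximate floating-point weights."""
    return [q * scale for q in quantised]


weights = [0.82, -1.27, 0.003, 0.41, -0.66]   # illustrative values only
quantised, scale = quantise_int8(weights)
restored = dequantise(quantised, scale)
max_error = max(abs(w, ) if False else abs(w - r) for w, r in zip(weights, restored))
```

The rounding error per weight is bounded by half the scale factor. Very small weights, such as 0.003 here, collapse to zero, which is why accuracy should be re-checked after quantising.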

Performance Metrics and Power Considerations

Evaluating hardware efficiency requires understanding how specifications translate to real-world results. Marketing materials often emphasise peak theoretical capabilities, but sustained throughput under workload conditions proves more telling. Three factors dominate practical assessments: computational speed, data transfer rates, and operational costs.

TFLOPS, Memory Speed and Energy Consumption

TFLOPS measurements vary significantly by precision mode. While FP32 (32-bit) calculations offer the greatest numerical range and precision, FP16 (16-bit) operations deliver 2-3× speed improvements for neural networks. Mixed-precision training balances the two: most matrix arithmetic runs in FP16, while numerically sensitive steps such as weight updates stay in FP32, keeping error margins acceptable.
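One reason mixed precision needs care is that very small gradients fall below the range FP16 can represent. The sketch below uses Python's standard `struct` module to round values to half precision and shows how loss scaling, a common remedy, keeps a tiny gradient alive. The gradient value and the scale factor of 1,024 are arbitrary choices for illustration.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


tiny_gradient = 1e-8
loss_scale = 1024.0

# Stored directly in FP16, the gradient underflows to zero and is lost.
unscaled = to_fp16(tiny_gradient)

# Scaling before the FP16 step keeps it representable; dividing afterwards
# in higher precision recovers a close approximation.
scaled = to_fp16(tiny_gradient * loss_scale)
recovered = scaled / loss_scale
```

Deep learning frameworks typically automate this scaling, but the arithmetic behind it is no more than what is shown here.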

Memory bandwidth determines how quickly processors access training data. High-end accelerators achieve 1TB/s+ rates, preventing stalls during matrix operations. Consider these real-world comparisons:

| Component | FP16 Tensor TFLOPS (dense) | Memory Bandwidth | TDP |
|---|---|---|---|
| RTX 4090 | 165 | 1,008GB/s | 450W |
| A100 PCIe (40GB) | 312 | 1,555GB/s | 250W |
| H100 SXM | 989 | 3,350GB/s | 700W |

Power draw directly impacts operational costs. Consumer cards averaging 220W suit small-scale experiments, while data centre solutions reaching 700W demand robust cooling. Thermal throttling reduces sustained performance by 15-30% in inadequate systems.

UK-based teams should prioritise performance-per-watt metrics. Energy tariffs and carbon footprint concerns make efficient components strategically vital. Third-party benchmarks reveal server-grade hardware often delivers 40% better throughput per kilowatt-hour than consumer alternatives.
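Both metrics are simple to compute for any candidate card. The sketch below uses the RTX 4090 figures from the table above (165 FP16 TFLOPS, 450W); the 100-hour run length and the £0.25 per kWh tariff are hypothetical placeholders, so substitute your own numbers. It also assumes the card draws its full rated power throughout, which overstates real consumption.

```python
def tflops_per_watt(tflops, tdp_watts):
    """Peak throughput divided by rated power draw."""
    return tflops / tdp_watts


def energy_cost_gbp(power_watts, hours, tariff_gbp_per_kwh):
    """Electricity cost of running at a constant power draw."""
    kilowatt_hours = power_watts / 1000 * hours
    return kilowatt_hours * tariff_gbp_per_kwh


efficiency = tflops_per_watt(165, 450)
run_cost = energy_cost_gbp(450, 100, 0.25)   # hypothetical run and tariff
```

With these inputs the card delivers about 0.37 TFLOPS per watt, and the 100-hour run consumes 45kWh, costing £11.25 at the assumed tariff.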

In-depth Look at Tensor Cores and Matrix Multiplication

Matrix operations form the backbone of modern neural network training, with specialised hardware accelerating these critical calculations. Traditional processors struggle with large-scale matrix multiplication, consuming hundreds of cycles for basic operations. This bottleneck disappears through dedicated tensor cores designed specifically for parallel mathematical workloads.

Optimising Matrix Multiplication with Tensor Cores

A single tensor core completes 4×4 matrix operations in one cycle – a task requiring multiple cycles on standard processing units. For complex 32×32 matrices, execution time drops from 504 cycles to 235 cycles. Fourth-generation designs achieve further improvements through asynchronous memory transfers and dedicated indexing hardware.

Key architectural developments include:

| GPU Generation | Matrix Ops/Cycle | Latency Reduction |
|---|---|---|
| Volta (1st-gen) | 64 FP16 | 40% |
| Ampere (3rd-gen) | 128 FP16 | 62% |
| Ada Lovelace (4th-gen) | 256 FP8 | 78% |

Mixed-precision training leverages this efficiency. Combining FP16 and FP32 calculations maintains accuracy while doubling throughput. Proper data alignment ensures tensor cores operate at peak efficiency – misaligned matrices can reduce performance by 35%.
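In practice, alignment usually means padding layer dimensions up to a friendly multiple. The helper below is a small sketch of that step. The default multiple of 8 reflects commonly cited guidance for FP16 workloads and should be treated as an assumption to check against your hardware's documentation; the vocabulary size is just an example of an awkward dimension.

```python
def pad_to_multiple(dimension, multiple=8):
    """Round a matrix dimension up to the next multiple."""
    if dimension <= 0 or multiple <= 0:
        raise ValueError("dimension and multiple must be positive")
    # Ceiling division, then scale back up.
    return -(-dimension // multiple) * multiple


padded_vocab = pad_to_multiple(50257)   # example of an unaligned size
already_aligned = pad_to_multiple(4096)
```

Here 50,257 rounds up to 50,264, adding only seven unused rows, while 4,096 is left unchanged.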

Modern accelerators like the H100 incorporate Tensor Memory Accelerators. These handle memory addressing directly in hardware, slashing latency by 22% compared to software-managed systems. Developers should batch operations to fill processing pipelines, maximising core utilisation.

“Properly configured tensor cores deliver 3.7x faster inference than traditional methods in our language model tests.”

Framework support remains crucial. Libraries like cuBLAS and TensorRT automatically optimise code for tensor core architectures. Regular software updates ensure compatibility with evolving hardware capabilities.

Memory Bandwidth and Cache Hierarchy

Efficient data movement often determines success in complex computational tasks. Modern accelerators employ sophisticated memory systems to balance speed and capacity, creating a four-tier hierarchy with distinct performance characteristics.

The fastest access occurs through registers and specialised cores (1-2 cycles), followed by shared memory and L1 cache (34 cycles). L2 cache requires 200 cycles, while global memory lags at 380 cycles. This gradient explains why memory management directly impacts training durations.
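A quick expected-latency calculation shows how strongly hit rates matter. The sketch below treats the cycle counts quoted above as the total cost of an access served at each level, which is a simplification, and the hit rates passed in are hypothetical.

```python
LATENCY_CYCLES = {"l1_shared": 34, "l2": 200, "global": 380}


def average_access_cycles(l1_hit_rate, l2_hit_rate):
    """Expected cycles per memory access.

    l1_hit_rate: fraction of accesses served by L1 or shared memory.
    l2_hit_rate: fraction of the remaining accesses served by L2;
                 everything else goes to global memory.
    """
    if not (0 <= l1_hit_rate <= 1 and 0 <= l2_hit_rate <= 1):
        raise ValueError("hit rates must lie between 0 and 1")
    l1_miss = 1 - l1_hit_rate
    return (l1_hit_rate * LATENCY_CYCLES["l1_shared"]
            + l1_miss * l2_hit_rate * LATENCY_CYCLES["l2"]
            + l1_miss * (1 - l2_hit_rate) * LATENCY_CYCLES["global"])


poor_locality = average_access_cycles(0.5, 0.5)
good_locality = average_access_cycles(0.9, 0.5)
```

Raising the first-level hit rate from 50% to 90% cuts the average from 162 cycles to roughly 60 in this model.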

Understanding L1, L2 and Shared Memory Performance

Memory tiling strategies prove essential for minimising delays. Developers partition matrices into blocks that fit faster cache levels, reducing global memory calls by 65% in transformer architectures. Ada Lovelace's expanded L2 cache (72MB on the RTX 4090) is large enough to hold the input and weight matrices of an individual BERT-Large matrix multiplication, slashing access times. The full model, at roughly 340 million parameters, still lives in global memory.

| Cache Level | Access Latency | Typical Size |
|---|---|---|
| L1/Shared | 34 cycles | 192KB |
| L2 | 200 cycles | 6-72MB |
| Global | 380 cycles | 24-80GB |
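The tiling strategy described above can be sketched in plain Python. This is a CPU illustration of the loop structure only, not GPU code: on real hardware each pair of tiles would be staged in shared memory and reused by many threads. The function name and the default tile size of 4 are our own choices.

```python
def tiled_matmul(a, b, tile=4):
    """Multiply two square matrices block by block.

    Returns the product and the number of tile-by-tile products performed.
    """
    n = len(a)
    assert len(b) == n and all(len(row) == n for row in a + b)
    assert n % tile == 0, "matrix size must be a multiple of the tile size"
    c = [[0.0] * n for _ in range(n)]
    tile_products = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # One tile of a and one tile of b are combined here; a GPU
                # kernel would load each from global memory once and reuse
                # it for every element of the output tile.
                tile_products += 1
                for i in range(i0, i0 + tile):
                    for j in range(j0, j0 + tile):
                        acc = 0.0
                        for k in range(k0, k0 + tile):
                            acc += a[i][k] * b[k][j]
                        c[i][j] += acc
    return c, tile_products


n = 8
a = [[float(i + j) for j in range(n)] for i in range(n)]
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
product, tile_products = tiled_matmul(a, identity)
```

An 8×8 product with 4×4 tiles takes 8 tile-level products, and a 32×32 product would take 512. Because each loaded tile serves a whole block of outputs, global memory traffic falls by roughly the tile width compared with fetching operands for every output element separately.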

Convolutional networks benefit differently from this hierarchy. Their sliding-window operations reuse nearby pixel data, achieving 40% higher cache hit rates compared to attention-based models. Streaming multiprocessors dynamically allocate resources across warps, prioritising active threads during peak loads.

| Architecture | L2 Cache | BERT Training Speed |
|---|---|---|
| Ampere | 6MB | 1.0x |
| Ada Lovelace | 72MB | 1.8x |

Practical optimisation begins with profiling tools like Nsight Systems. These identify excessive global memory calls, guiding code adjustments. Batch size tuning and memory coalescing further enhance bandwidth utilisation, particularly for UK-based teams managing energy-intensive setups.

Architectural Generations: Turing, Ampere and Ada

Architectural evolution in computational hardware continues reshaping artificial intelligence capabilities. Each generation introduces refinements that address specific bottlenecks in neural network processing. NVIDIA’s recent architectures demonstrate this progression through targeted improvements in cache systems and specialised cores.

Advancements Driving Modern AI Workloads

Volta’s 2017 debut brought first-gen Tensor Cores with 128KB shared memory – a breakthrough for matrix operations. The subsequent Turing architecture prioritised ray tracing enhancements, slightly reducing shared memory to 96KB. This trade-off maintained relevance for general deep learning tasks while expanding real-time rendering potential.

Ampere’s 2020 release marked a strategic pivot. Third-gen Tensor Cores appeared alongside restored 128KB shared memory, boosting FP32 performance by 2.7× versus predecessors. The architecture’s 6MB L2 cache proved sufficient for contemporary natural language models, though larger networks demanded creative optimisation.

Ada Lovelace’s 72MB L2 cache revolutionises data accessibility. This 12× increase over consumer Ampere's 6MB lets the input and weight matrices of individual BERT-Large matrix multiplications stay in cache, slashing memory fetches by 83%. Combined with fourth-gen Tensor Cores, the RTX 4090 reaches a peak of about 1.3 petaflops on FP8 operations with structured sparsity (roughly half that for dense workloads), enough for real-time 4K video analysis.

UK developers now balance these architectural gains against energy costs. Ada’s improved efficiency (roughly 0.37 FP16 TFLOPS per watt on the RTX 4090, from 165 TFLOPS at 450W) makes local model training viable, particularly when using quantisation techniques. As performance continues to scale with each generation, strategic hardware selection remains crucial for sustainable AI development.