Modern computing faces unprecedented demands from artificial intelligence. At the heart of this transformation lie specialised chips originally crafted for rendering video game graphics. These components now drive breakthroughs in everything from medical diagnostics to autonomous vehicles.

What makes these processors uniquely suited to machine learning tasks? Their architecture contains thousands of cores working simultaneously. Where a conventional CPU devotes a handful of powerful cores to largely sequential work, this parallel design dramatically accelerates the matrix-heavy mathematics behind machine learning.

The shift began when researchers recognised the potential of graphics hardware for non-visual calculations. Financial institutions were among the early adopters, using it for risk modelling. Today, tech giants and startups alike rely on these processors to train neural networks with billions of parameters.

Three critical factors explain their dominance:

- Unmatched speed in matrix operations

- Energy efficiency during prolonged computations

- Scalability across massive datasets

From robotics laboratories to cloud service providers, organisations leverage this hardware to process information at unprecedented scales. The following analysis explores how these engineering marvels became indispensable in modern data-driven industries.

Introduction: The Evolution from Graphics to AI

The journey from pixel rendering to neural networks marks one of computing’s most unexpected transformations. Specialised hardware initially designed for gaming visuals now fuels breakthroughs in artificial intelligence, reshaping industries from healthcare to autonomous transport.

Catalysts of Change

NVIDIA’s 2006 CUDA platform revolutionised graphics processing by letting developers repurpose chips for scientific calculations. This innovation caught researchers’ attention when Stanford’s Andrew Ng demonstrated its potential in 2008. His team processed 100 million-parameter models in one day using two GeForce GTX 280 chips – work that previously took weeks.

Accelerating Intelligence

Performance gains since 2003 tell a staggering story. Modern processors handle operations 7,000 times faster while costing 5,600 times less per calculation. This power surge coincided with three critical developments:

- Exponential growth in available data

- Advances in neural network architectures

- Democratisation of parallel computing

Financial institutions were among the first to capitalise on these advancements, applying them to risk modelling. Today, machine learning systems analysing medical scans or optimising energy grids rely on the same principles. The convergence of accessible processing power and sophisticated algorithms continues to drive innovation across sectors.

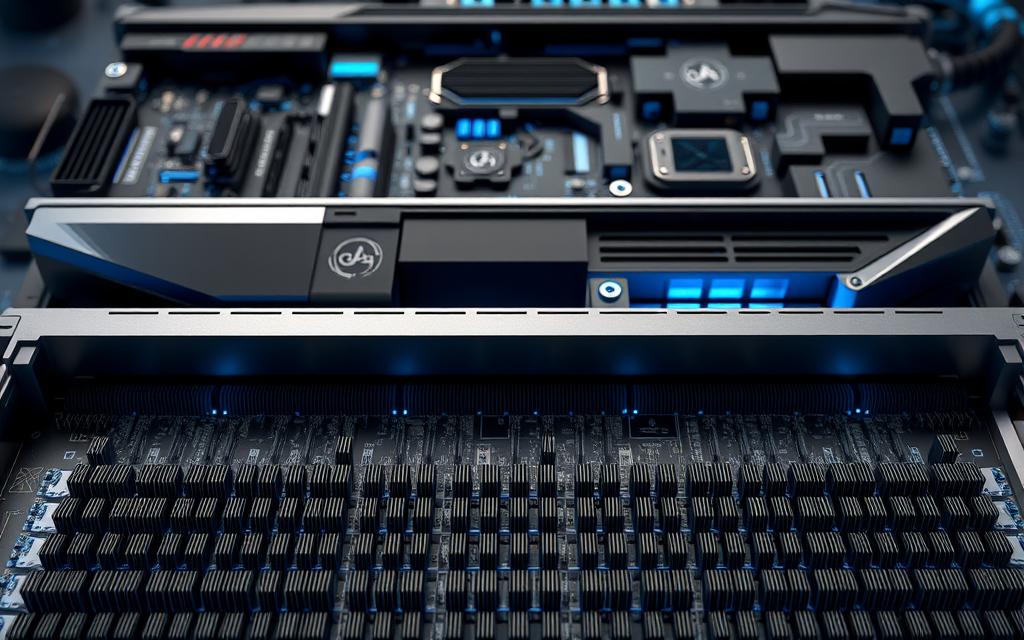

Exploring the Underlying Technology of GPUs

The secret behind accelerated artificial intelligence lies in silicon architectures originally crafted for visual computations. These components combine brute-force number crunching with elegant task distribution systems, enabling machines to recognise patterns faster than human analysts.

Architectural Foundations

Traditional processors tackle tasks sequentially, like reading a novel page by page. Modern accelerators approach workloads like scanning entire libraries at once. This shift stems from their design: thousands of simplified cores handling numerous operations concurrently rather than a few complex units working linearly.

Consider these comparative specifications:

| Component | Typical Core Count | Clock Speed | Parallel Tasks |
|---|---|---|---|
| Consumer CPU | 4-24 | 3-5 GHz | Sequential |
| Modern Accelerator | 5,000-10,000 | 1-2 GHz | Massively parallel |
| Tensor Core (NVIDIA) | Specialised units | N/A | Matrix operations |

This architectural approach revolutionises computational efficiency. While individual cores operate slower, their collective power dwarfs traditional setups. Memory systems play a crucial role, with high-bandwidth designs feeding data to processors without bottlenecks.
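The trade-off between slower individual cores and greater collective power can be sketched with some back-of-the-envelope arithmetic. The function below is hypothetical and assumes, purely for illustration, that every core completes one operation per clock cycle; real chips vary widely in how much work each core does per cycle, so the result is an idealised ceiling rather than a benchmark.

```python
def peak_ops_per_second(cores: int, clock_ghz: float, ops_per_cycle: int = 1) -> float:
    """Idealised peak throughput: every core busy on every clock cycle."""
    return cores * clock_ghz * 1e9 * ops_per_cycle


# Illustrative values chosen from inside the ranges in the table above.
cpu = peak_ops_per_second(cores=6, clock_ghz=4.0)
accelerator = peak_ops_per_second(cores=8_000, clock_ghz=1.5)

print(f"CPU:         {cpu:.2e} ops/s")
print(f"Accelerator: {accelerator:.2e} ops/s")
print(f"Ratio:       {accelerator / cpu:.0f}x")  # 500x under these assumptions
```

Even with each core clocked at well under half the CPU's speed, the sheer core count dominates the total in this simplified model.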

NVIDIA’s latest innovations demonstrate this evolution. Its current Tensor Cores complete matrix calculations 60 times faster than the first-generation design. These specialised units handle neural network mathematics directly, bypassing general-purpose circuitry. Such advancements make contemporary hardware indispensable for training billion-parameter models.

Why Are GPUs Used for Deep Learning?

Modern artificial intelligence thrives on mathematical complexity. Training sophisticated systems demands hardware capable of executing billions of simultaneous calculations – a challenge traditional processors struggle to address efficiently.

Parallel Processing Powerhouse

Neural networks rely on layered computations that mirror synaptic connections. Matrix multiplications form their core operations, requiring hardware optimised for:

- Concurrent execution of linear algebra tasks

- Rapid data transfer between memory and processors

- Sustained performance during prolonged training sessions
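To see why these layered computations parallelise so well, consider a minimal pure-Python sketch of one fully connected layer. The function name and the tiny example matrices are illustrative assumptions, not taken from any framework; the point is that every output element is an independent dot product.

```python
def dense_forward(inputs, weights):
    """Multiply a (batch x in) matrix by an (in x out) matrix.

    Each output element depends only on one input row and one weight
    column, so all of them could be computed at the same time.
    """
    in_features = len(weights)
    out_features = len(weights[0])
    return [
        [sum(row[k] * weights[k][j] for k in range(in_features))
         for j in range(out_features)]
        for row in inputs
    ]


batch = [[1.0, 2.0], [3.0, 4.0]]              # 2 samples, 2 features each
layer = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]   # 2 inputs -> 3 outputs

outputs = dense_forward(batch, layer)
independent_dot_products = len(batch) * len(layer[0])

print(outputs)                   # [[3.5, 3.0, 2.0], [7.5, 5.0, 4.0]]
print(independent_dot_products)  # 6
```

Here the six dot products run one after another. On parallel hardware each could be assigned to its own core, and realistic layers contain millions of them.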

NVIDIA’s architectural breakthroughs demonstrate this capability. The company reports that its GPUs now complete AI inference tasks 1,000 times faster than models from a decade ago, a leap that is transforming research timelines.

Revolutionising Model Development

Training advanced learning models previously took months using conventional chips. Modern accelerators compress this timeframe dramatically:

| Hardware | Training Duration (ResNet-50) | Energy Consumption |
|---|---|---|
| CPU Cluster | 3 weeks | 12,300 kWh |
| GPU System | 18 hours | 890 kWh |

This efficiency stems from specialised memory architectures. High-bandwidth designs keep thousands of cores fed with data, preventing bottlenecks during intensive computations.
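The figures in the table above can be turned into a speed-up and an energy saving with a few lines of arithmetic. This sketch uses only the table's numbers and assumes that "3 weeks" means three full weeks of continuous running.

```python
HOURS_PER_WEEK = 7 * 24

cpu_hours = 3 * HOURS_PER_WEEK  # "3 weeks" from the table
gpu_hours = 18
cpu_kwh = 12_300
gpu_kwh = 890

speedup = cpu_hours / gpu_hours
energy_saving = 1 - gpu_kwh / cpu_kwh

# Average power draw is energy divided by time.
cpu_avg_kw = cpu_kwh / cpu_hours
gpu_avg_kw = gpu_kwh / gpu_hours

print(f"Speed-up:      {speedup:.0f}x")         # 28x
print(f"Energy saving: {energy_saving:.1%}")    # 92.8%
print(f"CPU avg power: {cpu_avg_kw:.1f} kW")    # 24.4 kW
print(f"GPU avg power: {gpu_avg_kw:.1f} kW")    # 49.4 kW
```

On these numbers the GPU system draws more power while it runs. The saving comes from finishing far sooner, which is why total energy rather than instantaneous power is the more useful comparison.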

“What took years in 2012 now happens overnight. We’re witnessing computational evolution at quantum-leap scales.”

Financial institutions and healthcare providers alike leverage these advancements. From fraud detection to medical imaging analysis, accelerated machine learning workflows enable real-time decision-making across sectors.

Comparing CPUs, GPUs, and FPGAs for Deep Learning

Choosing the right hardware architecture determines success in artificial intelligence projects. Three processor types dominate discussions: traditional central processing units, graphics accelerators, and versatile programmable solutions. Each offers distinct advantages for different computational challenges.

Architectural Differences and Capabilities

CPUs handle tasks sequentially, excelling at single-threaded operations. Their design suits general computing needs but struggles with massive parallel workloads. Modern accelerators contain thousands of cores, processing multiple operations simultaneously.

Field-programmable gate arrays occupy a middle ground. These reconfigurable processors adapt to specific algorithms through custom circuitry. While offering flexibility, they demand specialised programming skills compared to mainstream alternatives.

| Processor Type | Core Count | Parallel Tasks | Energy Efficiency |
|---|---|---|---|
| CPU | 4-24 | Low | Moderate |
| GPU | 5,000+ | High | Excellent |
| FPGA | Programmable | Medium | Good |

Cost, Energy, and Scalability Considerations

Initial investment varies significantly between options. While CPUs have lower upfront costs, accelerators deliver better long-term value for intensive workloads. Energy consumption proves critical: GPU systems can complete tasks 14 times faster than central processing units while using 93% less energy.

Scalability often dictates choices. NVIDIA’s NVLink technology enables multi-GPU configurations handling billion-parameter models. For prototyping or niche applications, FPGAs provide adaptable capabilities without full custom chip development.

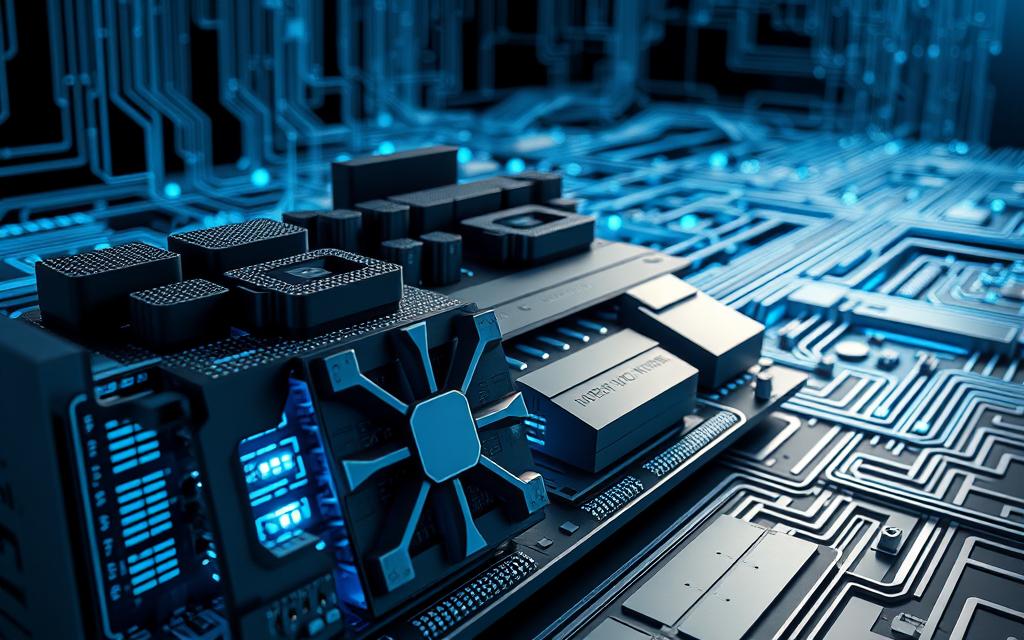

Harnessing Parallel Processing for Machine Learning

Parallel computing architectures redefine how machines interpret complex datasets. Unlike traditional methods that process information step-by-step, these systems execute thousands of operations concurrently. This approach proves particularly effective for machine learning tasks requiring rapid analysis of multidimensional data.

Simultaneous Data Processing Benefits

Contemporary algorithms thrive when handling multiple data streams at once. Parallel architectures divide workloads into smaller sub-tasks, processing them across numerous cores simultaneously. This method accelerates computations for applications ranging from real-time fraud detection to genomic pattern recognition.
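The divide, process and combine pattern described above can be sketched with Python's standard library. The helper names and the sum-of-squares workload are hypothetical stand-ins. Because CPython threads do not run CPU-bound code truly in parallel, this illustrates the shape of the decomposition rather than a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, chunk_size):
    """Cut a workload into independent sub-tasks of at most chunk_size items."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def sum_of_squares(chunk):
    """Stand-in sub-task; any function of a single chunk would do."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, chunk_size=250, workers=4):
    chunks = split_into_chunks(items, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)  # combine the partial results


data = list(range(1_000))
total = parallel_sum_of_squares(data)
print(total)  # 332833500
```

The same three steps apply on an accelerator, except that the sub-tasks number in the thousands and genuinely execute at the same time.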

Consider language model training as an example. A single input sequence might involve 50,000 mathematical operations. Parallel systems complete these calculations in batches rather than individually, slashing processing times dramatically. The table below illustrates performance differences:

| Task Type | Sequential Processing | Parallel Processing |
|---|---|---|
| Image Classification | 12 minutes | 47 seconds |
| Text Generation | 9 hours | 22 minutes |
| Video Analysis | 3 days | 4 hours |

Matrix multiplication, the backbone of neural networks, benefits immensely from this approach. Parallel architectures have been reported to perform these operations up to 140 times faster than sequential methods.

Key advantages include:

- Reduced energy consumption per operation

- Scalability across distributed systems

- Real-time processing capabilities

“Parallelism transforms theoretical models into practical solutions. What once required weeks of computation now happens during a coffee break.”

Financial institutions leverage these capabilities for high-frequency trading algorithms. Healthcare researchers apply them to analyse medical imaging datasets. The technology’s adaptability across sectors underscores its transformative potential in data-driven industries.

Deep Learning: Performance, Memory, and Scalability

Artificial intelligence’s explosive growth demands hardware that keeps pace with trillion-parameter models. Cutting-edge systems combine advanced memory architectures with scalable infrastructure, enabling breakthroughs once deemed impossible.

Memory Management and the Role of Tensor Cores

Modern accelerators employ HBM3e technology, delivering a bandwidth of 1.5 terabytes per second. This memory innovation allows simultaneous access to 144TB of shared memory in configurations like NVIDIA’s DGX GH200. Tensor Cores support a range of numeric precisions, from FP32 down to INT8, so precision can be matched to workload demands and lower-precision formats can reduce memory use.
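A rough sketch shows why precision matters for memory. It estimates the storage needed for model weights alone, assuming 4, 2 and 1 bytes per parameter for FP32, FP16 and INT8 (FP16 is added here for comparison) and decimal gigabytes. The 288GB default is the 2024 memory capacity quoted later in this section; activations, optimiser state and other overheads are deliberately ignored, and the function names are hypothetical.

```python
import math

BYTES_PER_PARAMETER = {"FP32": 4, "FP16": 2, "INT8": 1}


def weight_memory_gb(parameters: int, precision: str) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return parameters * BYTES_PER_PARAMETER[precision] / 1e9


def devices_needed(parameters: int, precision: str, device_gb: int = 288) -> int:
    """Minimum number of devices whose combined memory holds the weights."""
    return math.ceil(weight_memory_gb(parameters, precision) / device_gb)


one_trillion = 10 ** 12
for precision in BYTES_PER_PARAMETER:
    print(precision,
          weight_memory_gb(one_trillion, precision),
          devices_needed(one_trillion, precision))
# FP32 4000.0 14
# FP16 2000.0 7
# INT8 1000.0 4
```

Halving the bytes per parameter halves the footprint, which is why lower precision is attractive wherever accuracy permits.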

Scalability in Modern AI Systems

NVLink interconnects enable 256-chip supercomputers to function as single entities. The table below illustrates scaling capabilities:

| Component | 2018 Systems | 2024 Systems |
|---|---|---|
| Parameters | 100 million | 1 trillion+ |
| Memory Capacity | 32GB HBM2 | 288GB HBM3e |
| Interconnect Speed | 100Gb/s | 400Gb/s |

NVIDIA’s Quantum InfiniBand networking reduces latency by 75% in distributed setups. This infrastructure supports training runs spanning thousands of accelerators, making billion-parameter models commercially viable.
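The effect of interconnect speed on distributed training can be approximated with simple arithmetic. The sketch below compares the 100Gb/s and 400Gb/s figures from the table above for a hypothetical 2GB payload (for example, one billion 16-bit gradient values). It ignores latency, protocol overhead and the communication pattern, so it gives a lower bound on transfer time rather than a prediction.

```python
def transfer_seconds(payload_gigabytes: float, link_gigabits_per_s: float) -> float:
    """Ideal time to move a payload over a link (8 bits per byte)."""
    return payload_gigabytes * 8 / link_gigabits_per_s


payload_gb = 2.0  # hypothetical payload size, chosen for illustration

older_link = transfer_seconds(payload_gb, 100)
newer_link = transfer_seconds(payload_gb, 400)

print(f"100Gb/s: {older_link:.2f} s")  # 0.16 s
print(f"400Gb/s: {newer_link:.2f} s")  # 0.04 s
```

When such an exchange happens at every training step, a fourfold reduction per transfer compounds across millions of steps.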

“Memory bandwidth dictates what’s possible in AI. Our latest architectures remove previous constraints, letting researchers focus on innovation rather than limitations.”

Industry Use Cases and Real-World Applications

Cutting-edge computational solutions now drive innovations across every major industry sector. From detecting credit card fraud to personalising streaming content, accelerated processing reshapes how businesses operate and deliver value.

AI Innovations Across Sectors

Healthcare systems leverage parallel architectures to analyse 3D medical scans in seconds rather than hours. London-based hospitals use machine learning applications built on this hardware to identify tumours with 98% accuracy, improving early diagnosis rates.

Financial institutions process 1.2 million transactions per second using real-time fraud detection systems. Major UK banks report 60% fewer fraudulent activities since implementing these solutions.

Key industry transformations include:

- Climate modelling achieving 700,000x faster hurricane prediction

- Autonomous vehicles processing 100TB of sensor data daily

- Streaming platforms generating £2.8bn annually through personalised recommendations

| Sector | Use Case | Impact |
|---|---|---|
| Healthcare | Medical imaging analysis | 89% faster diagnoses |
| Finance | Fraud pattern recognition | £4.7bn annual savings |
| Automotive | Self-driving navigation | 2.1m test miles completed |
| Environmental | Climate simulation | 5x faster carbon reduction strategies |

Over 40,000 enterprises worldwide employ these technologies, supported by 4 million developers creating sector-specific solutions. NVIDIA’s ecosystem alone powers 90% of machine learning frameworks used in commercial applications.

“What began as niche experimentation now underpins critical infrastructure. Our global community demonstrates daily how computational power solves humanity’s greatest challenges.”

Overcoming Operational Challenges with GPUs

Balancing cutting-edge performance with practical implementation remains critical for organisations adopting advanced computational solutions. While specialised hardware delivers unparalleled speed, operational demands require careful management of energy consumption and infrastructure costs.

Optimising Power Consumption

Modern systems consume substantial electricity during intensive computing workloads. NVIDIA’s TensorRT-LLM software demonstrates progress, with reported gains of up to 8x faster inference alongside roughly 5x lower energy use compared to conventional setups. Efficiency gains of this kind help enterprises meet sustainability targets without compromising performance.

Key strategies for high-performance computing environments include:

- Dynamic voltage scaling during lighter workloads

- Liquid-cooled server architectures

- Intelligent workload distribution across CPUs and accelerators
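The benefit of the first strategy, dynamic voltage scaling, follows from the standard first-order model of switching power in CMOS circuits, where dynamic power is proportional to the square of the supply voltage multiplied by the clock frequency. The sketch below applies that proportionality to hypothetical scaling factors; it ignores static (leakage) power, so actual savings will differ.

```python
def relative_dynamic_power(voltage_scale: float, frequency_scale: float) -> float:
    """Dynamic power relative to baseline, using P proportional to V^2 * f."""
    return voltage_scale ** 2 * frequency_scale


# Hypothetical lighter workload: 10% lower voltage, 20% lower clock speed.
reduced = relative_dynamic_power(voltage_scale=0.9, frequency_scale=0.8)
print(f"{reduced:.3f}")  # 0.648, i.e. about 35% less dynamic power
```

Because voltage enters as a square, even modest reductions during lighter workloads yield disproportionate savings.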

On-premises deployments face particular challenges. Managing multiple units demands robust cooling systems and upgraded power infrastructure, factors that contribute to 40% higher operational costs in some configurations. Cloud-based solutions offer an alternative, with providers absorbing scalability complexities through shared infrastructure.

The future lies in adaptive systems. Hybrid approaches combining energy-efficient hardware with smart workload allocation now enable processing large datasets responsibly. As UK data centres adopt these practices, the industry moves closer to sustainable computing paradigms without sacrificing computational might.