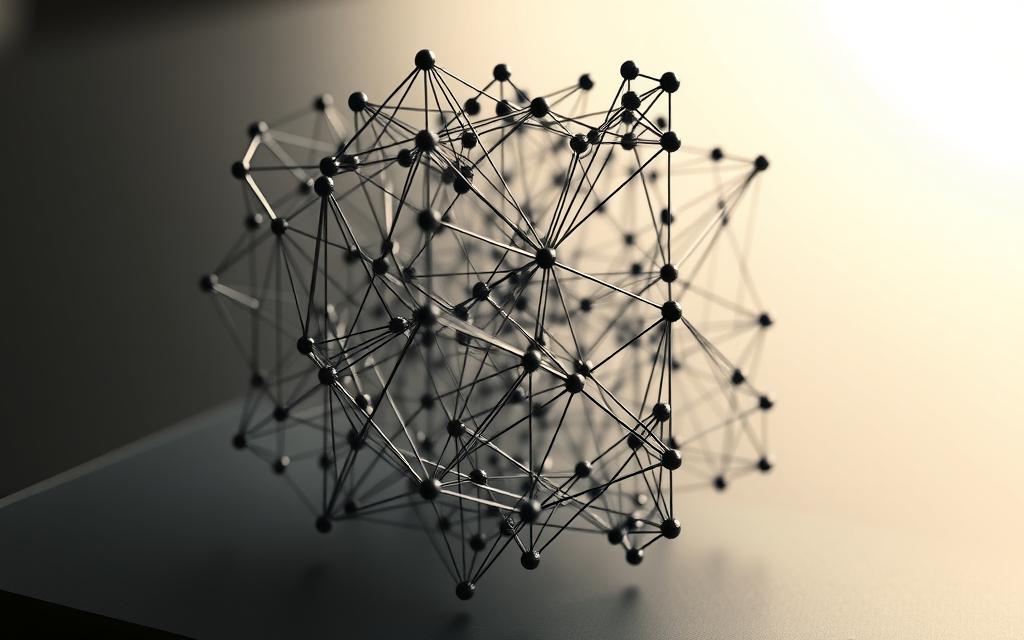

Modern computational systems owe much to artificial neural networks, algorithms designed to mimic biological brain functions. These structures excel at identifying intricate patterns within datasets, enabling machines to make predictions with remarkable accuracy. Inspired by the human nervous system, they’ve become central to solving complex challenges across industries.

Originally conceived to replicate biological processes, these computational models now drive advancements in machine learning. Their layered architecture processes information through interconnected nodes, simulating how neurons transmit signals. This approach differs fundamentally from rule-based programming, allowing systems to adapt through experience rather than rigid instructions.

The evolution from theoretical concept to practical tool began with early neuroscience research. Today’s implementations power innovations ranging from voice recognition to medical diagnostics. Organisations leverage their predictive capabilities to enhance decision-making processes and automate tasks once deemed impossible for machines.

Understanding these networks provides crucial insights into contemporary deep learning frameworks. Their ability to handle unstructured data makes them indispensable for developers tackling real-world AI challenges. Subsequent sections will explore their architecture, training methods, and transformative applications shaping our technological landscape.

Introduction to Artificial Neural Networks

Biological nervous systems laid the blueprint for computational breakthroughs. Early researchers observed how brain cells transmit signals through intricate networks, sparking ideas about replicating this process digitally. This cross-disciplinary approach bridged neuroscience and computer science, creating systems that evolve through experience.

From Biological Inspiration to Digital Models

Warren McCulloch and Walter Pitts laid the groundwork in 1943 by proposing the first mathematical model of an artificial neuron. Their work demonstrated how simplified neuron models could perform logical operations. Four decades later, the popularisation of backpropagation and advances in data processing transformed these concepts into practical tools for pattern recognition.

Biological synapses inspired weighted connections between artificial nodes. Like their organic counterparts, these digital neurons process inputs and fire outputs based on specific thresholds. This adaptive mechanism allows networks to refine their performance through repeated exposure to information.
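The threshold behaviour described above can be illustrated with a minimal sketch in the spirit of the McCulloch and Pitts model. The function names, weights, and thresholds below are illustrative assumptions rather than values from the original 1943 paper.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Changing only the threshold turns the same neuron from an AND gate into an OR gate, which hints at how adjustable parameters shape a network's behaviour.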

Core Concepts and Key Terminology

Three fundamental components define these systems:

| Element | Biological Equivalent | Function |
|---|---|---|
| Neurons | Brain cells | Process and transmit signals |
| Weights | Synaptic strength | Determine signal importance |
| Activation patterns | Neural firing | Trigger output responses |

Modern implementations adjust connection weights automatically during training phases. This self-optimising capability enables networks to develop sophisticated representations of complex data relationships. Unlike traditional software, they prioritise pattern detection over predefined rules.

What Is an ANN in Deep Learning?

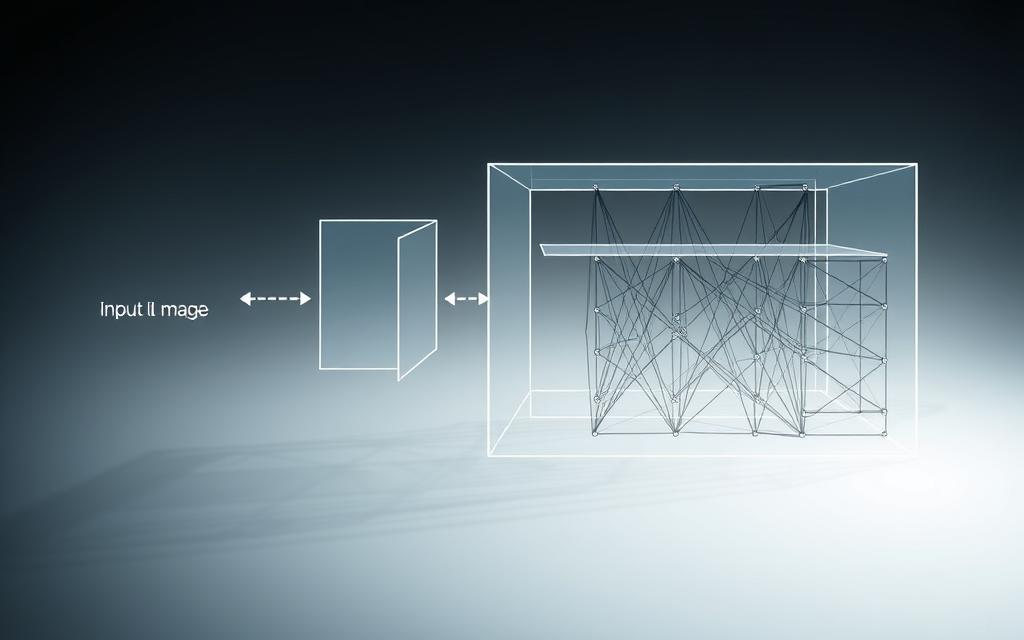

Contemporary AI systems rely on layered computational structures to interpret complex data patterns. These frameworks process information through interconnected nodes, adjusting connection weights through iterative training cycles. Unlike traditional machine learning approaches, which often depend on hand-crafted features, they learn useful representations directly from the data.

Multi-layered architectures distinguish deep learning systems from simpler models. Each layer extracts progressively abstract features, enabling machines to recognise faces or translate languages. This hierarchical processing mimics human cognitive functions more closely than single-layer alternatives.

| Aspect | Traditional ML | Layered Systems |
|---|---|---|
| Data Handling | Structured inputs | Raw, unstructured data |
| Learning Approach | Feature engineering | Automatic pattern detection |
| Complexity Management | Manual adjustments | Self-optimising weights |

Training mechanisms compare predictions against actual outcomes, adjusting parameters to minimise errors. This feedback loop allows systems to improve performance across diverse tasks – from medical imaging analysis to financial forecasting. Organisations increasingly adopt these models for their adaptability in dynamic environments.
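One common way to compare predictions against actual outcomes is mean squared error. The sketch below assumes this particular loss for illustration; the article does not name one, and the sample values are made up.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


targets = [1.0, 0.0, 1.0]
rough_guess = [0.5, 0.5, 0.5]    # an untrained model hedging every answer
better_guess = [0.9, 0.1, 0.8]   # predictions after some hypothetical training

print(mean_squared_error(rough_guess, targets))
print(mean_squared_error(better_guess, targets))
```

A lower value means the predictions sit closer to the actual outcomes, which is the quantity training tries to reduce.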

The evolution of mathematical frameworks has enabled machines to process speech and visual information with human-like accuracy. Subsequent sections will detail how specific architectural choices enhance these capabilities across different industries.

Fundamental Architecture of ANNs

At the core of every neural network lies a meticulously organised framework of interconnected layers. This architecture determines how data flows through the system, transforming raw inputs into meaningful predictions. Three distinct components collaborate to achieve this transformation.

Understanding Input, Hidden, and Output Layers

The input layer acts as the network’s reception desk, receiving numerical representations of real-world data. Proper formatting here ensures efficient processing: temperatures might be scaled to the range 0 to 1, while text is converted into numerical vectors.
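As a rough illustration of input formatting, the following sketch applies min-max scaling to a list of temperatures. The function name and the sample readings are assumptions made for this example.

```python
def min_max_scale(values):
    """Linearly rescale numbers so the smallest maps to 0 and the largest to 1."""
    low, high = min(values), max(values)
    if high == low:
        # All values are identical: avoid dividing by zero and map everything to 0.
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


temperatures_c = [-5.0, 0.0, 10.0, 25.0, 35.0]
print(min_max_scale(temperatures_c))
```

In practice the minimum and maximum would usually be computed on the training data and reused for new inputs, so that every value is scaled consistently.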

Multiple hidden layers form the computational engine. Each node applies mathematical operations to incoming signals, distilling patterns through weighted connections. Deeper configurations enable hierarchical learning – early layers detect edges in images, while subsequent ones recognise complex shapes.

| Layer Type | Key Responsibility |
|---|---|
| Input | Data reception & formatting |
| Hidden | Feature extraction & transformation |
| Output | Result generation |
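To make the flow from input to hidden to output layer concrete, here is a minimal forward pass in plain Python. The layer sizes and all weight and bias values are arbitrary assumptions chosen for illustration, not trained parameters.

```python
def relu(x):
    return max(0.0, x)


def identity(x):
    return x


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(weighted_sum))
    return outputs


# Illustrative parameters: 2 inputs -> 3 hidden neurons -> 1 output.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[1.0, -1.0, 0.5]]
output_biases = [0.2]


def forward(inputs):
    hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
    return dense_layer(hidden, output_weights, output_biases, identity)


print(forward([1.0, 2.0]))
```

Each call to `dense_layer` corresponds to one row of the table: the input list is the formatted data, the hidden layer transforms it, and the final layer produces the result.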

Role of Activation Functions

These mathematical functions determine whether neurons fire signals onward. Without them, networks could only model linear relationships. The ReLU activation function (Rectified Linear Unit) has become popular for its computational efficiency in deep architectures.

Sigmoid and tanh functions remain useful for specific scenarios. Their S-shaped curves compress values into fixed ranges: sigmoid maps to values between 0 and 1, which suits probability estimates in classification tasks, while tanh maps to values between -1 and 1. Choosing appropriate activation functions significantly impacts model performance across different applications.
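The three activation functions mentioned here are short enough to write out directly. This is a minimal sketch using only the standard library; the branch in `sigmoid` is an implementation choice to avoid overflow for large negative inputs.

```python
import math


def relu(x):
    """Rectified Linear Unit: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe for large negative x, where exp(-x) would overflow
    return z / (1.0 + z)


def tanh(x):
    """Squash any real number into the open interval (-1, 1)."""
    return math.tanh(x)


for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), round(sigmoid(x), 4), round(tanh(x), 4))
```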

Key Components and Functionality of Neural Networks

Neural networks achieve intelligent behaviour through carefully orchestrated interactions between computational elements. These systems process information using layered architectures where each component plays distinct roles in pattern recognition and decision-making.

Neurons act as fundamental processing units, receiving signals from connected nodes. They apply mathematical transformations to inputs before passing results to subsequent layers. This collaborative approach enables networks to identify subtle correlations within complex datasets.

Connection strengths between neurons are governed by adjustable parameters called weights. These numerical values determine how significantly one node’s output affects another’s activity. During training phases, algorithms modify weights to reduce discrepancies between predictions and actual outcomes.

| Component | Operational Role |
|---|---|
| Neurons | Process inputs using activation functions |
| Weights | Regulate signal transmission strength |
| Activation Function | Introduce non-linear decision boundaries |

Each neuron calculates a weighted sum of its inputs before applying an activation function. Popular choices like ReLU help networks model intricate relationships in visual or textual data. This mathematical foundation allows layered systems with enough hidden units to approximate any continuous function on a bounded input range to arbitrary precision.

Parallel processing across multiple pathways enables efficient handling of large-scale information. The collective behaviour of interconnected nodes often produces capabilities exceeding individual component performance. This emergent intelligence makes neural networks particularly effective for tasks requiring adaptive learning.

Modern implementations automatically adjust their internal parameters through exposure to training examples. This self-optimising quality allows the model to refine its understanding without explicit programming. Such flexibility drives applications ranging from predictive analytics to autonomous system control.

The Role of Backpropagation in ANN Learning

Machine intelligence systems refine their capabilities through iterative training processes. The backpropagation algorithm serves as the backbone of this improvement cycle, enabling neural networks to evolve by analysing prediction inaccuracies. This mechanism transforms static architectures into adaptive tools capable of self-correction.

Optimising Weights and Minimising Error

Connection strengths between artificial neurons undergo systematic adjustments during training. The algorithm calculates each weight’s contribution to the overall error by applying the chain rule to obtain partial derivatives of the loss. Weights with little influence on the error receive small updates, while those with a larger influence are adjusted more strongly, with the step size scaled by a learning rate.
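The partial derivatives involved can be worked through by hand for a very small network. The sketch below assumes a hypothetical network with one input, one sigmoid hidden neuron, and one linear output, trained with a squared-error loss; it compares the backpropagated gradients against a numerical estimate as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2):
    h = sigmoid(w1 * x)   # hidden activation
    y = w2 * h            # linear output
    return h, y


def loss(x, t, w1, w2):
    _, y = forward(x, w1, w2)
    return 0.5 * (y - t) ** 2


def backward(x, t, w1, w2):
    """Apply the chain rule from the loss back to each weight."""
    h, y = forward(x, w1, w2)
    dL_dy = y - t                        # derivative of the loss w.r.t. the output
    dL_dw2 = dL_dy * h                   # output weight: error times hidden activation
    dL_dh = dL_dy * w2                   # error passed back to the hidden neuron
    dL_dw1 = dL_dh * h * (1.0 - h) * x   # through the sigmoid, then to w1
    return dL_dw1, dL_dw2


def numerical_gradient(x, t, w1, w2, eps=1e-6):
    """Central finite differences, used only to check the analytic result."""
    g1 = (loss(x, t, w1 + eps, w2) - loss(x, t, w1 - eps, w2)) / (2 * eps)
    g2 = (loss(x, t, w1, w2 + eps) - loss(x, t, w1, w2 - eps)) / (2 * eps)
    return g1, g2


x, t, w1, w2 = 1.5, 1.0, 0.4, -0.6   # arbitrary illustrative values
print(backward(x, t, w1, w2))
print(numerical_gradient(x, t, w1, w2))
```

The two printed pairs should agree closely, which is a common way to check a hand-written backward pass.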

| Aspect | Backpropagation | Gradient Descent |
|---|---|---|
| Primary Function | Error distribution | Parameter adjustment |
| Adjustment Method | Layer-wise calculations | Step-size optimisation |
| Error Handling | Backward propagation | Directional minimisation |

Introduction to Gradient Descent

This optimisation technique navigates complex mathematical landscapes in search of weight configurations with low error. By measuring error gradients, it identifies the direction of steepest descent and takes a small step that way, repeating until the error stops improving. The process resembles descending mountainous terrain by always following the steepest downward slope, which may lead to a nearby valley rather than the lowest point overall.
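A minimal sketch of the idea, assuming a single weight and a simple bowl-shaped loss chosen purely for illustration: the loss is (w - 3)^2, so the lowest point is at w = 3.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the loss."""
    w = start
    for _ in range(steps):
        w -= learning_rate * gradient(w)
    return w


def loss_gradient(w):
    # Derivative of the illustrative loss (w - 3)^2.
    return 2.0 * (w - 3.0)


final_w = gradient_descent(loss_gradient, start=0.0)
print(final_w)
```

The learning rate controls the step size: too small and progress is slow, too large and the updates can overshoot the minimum.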

Practical implementations combine both techniques for efficient machine learning. Together, they enable systems to adjust thousands of parameters simultaneously while maintaining computational stability. This synergy powers advancements in facial recognition software and adaptive translation tools.
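The sketch below combines both steps on a toy problem: a single sigmoid neuron learning the logical OR function. The dataset, learning rate, and epoch count are assumptions for illustration, and the gradient formula assumes a cross-entropy loss, which the article does not specify.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Logical OR as a toy dataset: (inputs, target).
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for inputs, target in DATA:
            # Backward pass: with a sigmoid output and cross-entropy loss,
            # the gradient at the weighted sum is (prediction - target).
            error = predict(weights, bias, inputs) - target
            for i, x in enumerate(inputs):
                grad_w[i] += error * x / len(DATA)
            grad_b += error / len(DATA)
        # Gradient descent step using the averaged gradients.
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


weights, bias = train()
for inputs, target in DATA:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

Real networks repeat the same two steps across many layers and far more parameters, usually with a library handling the derivative calculations.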

Diverse ANN Types and Their Applications

Artificial intelligence systems employ specialised architectures tailored to distinct computational challenges. Five primary variants have emerged to handle tasks ranging from image analysis to sequential data processing. Each design optimises performance for specific data formats and problem types.

Feedforward Networks: Pattern Recognition Foundations

The simplest architecture processes information in one direction without feedback loops. These neural networks excel at classifying customer reviews or predicting housing prices. Their straightforward design makes them ideal for introductory machine learning projects.

Convolutional Architectures: Visual Data Specialists

These convolutional neural networks analyse grid-structured information through layered filters. Researchers first applied them to tasks such as handwritten digit recognition, and they are now widely used for interpreting medical scans and satellite imagery. Their structure detects edges, textures, and complex shapes through progressive abstraction.
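To show how a filter picks out an edge, here is a minimal sketch of the sliding-window operation at the heart of a convolutional layer, with no padding or stride. The tiny image and the hand-written vertical-edge filter are illustrative assumptions; in a trained network the filter values are learned.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A dark region (0) on the left meets a bright region (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(convolve2d(image, vertical_edge))
```

The output is non-zero only in the column where the dark and bright regions meet, which is how a filter localises an edge.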

Recurrent Systems: Sequential Data Processors

Memory-enabled designs handle time-series information and language patterns. Stock market forecasting and speech-to-text conversion benefit from their temporal awareness. Unlike feedforward models, they maintain context across sequential inputs through internal loops.
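The internal loop can be sketched with a single recurrent unit whose hidden state is fed back in at every step. The scalar weights below are arbitrary assumptions for illustration; real recurrent layers use weight matrices learned during training.

```python
import math


def rnn_step(x, h_prev, w_input, w_hidden, bias):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_input * x + w_hidden * h_prev + bias)


def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    h = 0.0  # the hidden state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_input, w_hidden, bias)
        states.append(h)
    return states


# A single pulse at the first step, followed by silence.
print(run_rnn([1.0, 0.0, 0.0]))
# No input at all, for comparison.
print(run_rnn([0.0, 0.0, 0.0]))
```

In the first run the hidden state stays above zero after the input has gone quiet, showing how earlier inputs continue to influence later steps.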

| Network Type | Specialisation | Common Applications |
|---|---|---|
| Feedforward | Static pattern recognition | Fraud detection, customer segmentation |
| Convolutional | Spatial data analysis | Facial recognition, autonomous vehicles |
| Recurrent | Temporal dependencies | Speech recognition, text generation |
| LSTM | Long-term sequence memory | Machine translation, weather prediction |
| GAN | Data generation | Art creation, drug discovery |

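Advanced variants like Generative Adversarial Networks push boundaries in creative domains. They pair two competing networks, a generator that produces synthetic samples and a discriminator that tries to tell them apart from real ones, and can yield synthetic media that is difficult to distinguish from authentic samples. Developers choose architectures based on data characteristics and desired outcomes.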

Practical Applications of Artificial Neural Networks in Industry

Industries worldwide harness artificial neural networks to solve complex challenges with unprecedented efficiency. These systems analyse vast datasets, uncovering patterns invisible to human analysts. Their adaptability fuels innovations across sectors, from healthcare to autonomous transportation.

Visual processing applications utilise convolutional architectures to interpret medical scans and satellite imagery. Radiologists employ these tools to detect tumours in X-rays with 97% accuracy, reducing diagnostic errors. Retailers apply similar technology to monitor stock levels through automated shelf scanning.

Natural language processing powers chatbots handling 73% of customer service queries in UK banks. Translation services leverage recurrent architectures to maintain context across lengthy documents. Content moderation systems automatically flag inappropriate language using text classification models trained on millions of samples.

Financial institutions rely on neural networks to predict market trends and detect fraudulent transactions. One major bank reduced false fraud alerts by 40% through adaptive learning systems. Hedge funds employ these models to analyse global economic indicators in real time.

Autonomous vehicles process LiDAR and camera feeds through layered architectures, making split-second navigation decisions. Transport for London trials such systems to optimise traffic flow during peak hours. These technologies also enhance railway safety through predictive track maintenance.

Healthcare breakthroughs include early Parkinson’s detection through voice pattern analysis. Pharmaceutical companies accelerate drug discovery by simulating molecular interactions. “Neural architectures cut our research timeline by 18 months,” notes a leading UK biotech director.

Recommendation engines drive 35% of e-commerce revenues by analysing user behaviour patterns. Streaming services personalise content suggestions using collaborative filtering techniques. This machine learning approach boosts customer engagement while respecting privacy regulations.

Deep Learning and Machine Learning: A Comparative Insight

Understanding computational approaches requires distinguishing between machine learning and its advanced counterpart. Traditional methods focus on training algorithms to automate decisions using structured data, while layered systems handle raw inputs through hierarchical processing.

Deep learning specialises in analysing complex patterns through multi-tiered artificial neural networks. These architectures automatically extract features, eliminating manual engineering common in conventional machine learning. This autonomy makes them particularly effective for speech analysis and image recognition tasks.

Resource demands differ significantly between approaches. Layered models typically require extensive datasets and GPU acceleration for optimal performance. Simpler algorithms often deliver efficient results with smaller, structured data pools using standard processors.

Choosing between these approaches depends on project scope and available resources. Conventional methods excel in scenarios with limited computational power, while deep learning tackles high-dimensional challenges like genomic sequencing. Both strategies continue reshaping how organisations leverage data for strategic advantage.

FAQ

How do artificial neural networks mimic biological processes?

Inspired by the human brain’s neurons, artificial neural networks use interconnected layers to process data. These digital models simulate synaptic connections through weighted inputs and activation functions, enabling pattern recognition and decision-making.

What distinguishes deep neural networks from traditional models?

Deep neural networks incorporate multiple hidden layers, allowing complex feature extraction. Unlike shallow architectures, they excel in tasks like image classification or speech recognition by hierarchically refining data representations.

Why are activation functions critical in hidden layers?

Activation functions introduce nonlinear transformations, enabling networks to model intricate relationships. Without functions like ReLU or sigmoid, any stack of layers would collapse into a single linear transformation, however deep the network.
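The collapse of purely linear layers can be checked with simple arithmetic. The sketch below assumes scalar layers with arbitrary illustrative weights: two linear layers in a row behave exactly like one, whereas inserting ReLU between them breaks that equivalence.

```python
def linear_layer(x, weight, bias):
    return weight * x + bias


def relu(x):
    return max(0.0, x)


w1, b1 = 2.0, 1.0    # first layer (illustrative values)
w2, b2 = -3.0, 0.5   # second layer (illustrative values)


def two_linear_layers(x):
    return linear_layer(linear_layer(x, w1, b1), w2, b2)


def collapsed_layer(x):
    # w2 * (w1 * x + b1) + b2 simplifies to (w2 * w1) * x + (w2 * b1 + b2).
    return linear_layer(x, w2 * w1, w2 * b1 + b2)


def with_relu(x):
    return linear_layer(relu(linear_layer(x, w1, b1)), w2, b2)


for x in (-2.0, 0.0, 1.0):
    print(x, two_linear_layers(x), collapsed_layer(x), with_relu(x))
```

For negative inputs the ReLU version departs from the single-layer result, which is the extra expressive power that non-linear activations provide.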

How does backpropagation adjust weights during training?

Backpropagation calculates the gradient of the loss function with respect to each weight. An optimiser such as gradient descent then uses these gradients to update the weights iteratively, minimising prediction errors and improving the model’s accuracy.

Which industries leverage convolutional neural networks effectively?

Healthcare uses CNNs for medical imaging analysis, while automotive sectors apply them in autonomous vehicles. Retailers employ these networks for visual search tools, enhancing customer experiences through accurate object detection.

Can unsupervised learning algorithms operate without labelled data?

Yes. Unsupervised learning identifies patterns in unlabelled datasets, such as clustering customer behaviour or reducing dimensionality. Techniques like autoencoders or k-means uncover hidden structures without predefined outputs.

What role do weights play in prediction accuracy?

Weights determine the influence of input signals on a neuron’s output. Properly tuned weights enable accurate data mapping, while poorly calibrated ones introduce errors, necessitating rigorous training processes.

How do recurrent networks handle sequential data tasks?

Recurrent neural networks (RNNs) use loops to retain historical information, making them ideal for time-series forecasting or natural language processing. Architectures like LSTM mitigate vanishing gradients, preserving context over long sequences.

Are ANNs suitable for real-time speech recognition systems?

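Yes. Voice assistants such as Apple’s Siri and Google Assistant rely on deep neural networks to process audio signals rapidly. These systems convert speech to text by analysing phonetic patterns and contextual cues with low latency. (WaveNet, often mentioned alongside them, is a DeepMind model for generating speech rather than recognising it.)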

What challenges arise when training multi-layered architectures?

Vanishing gradients, overfitting, and computational costs are common. Techniques like batch normalisation, dropout layers, and hardware acceleration (e.g., NVIDIA GPUs) address these issues, ensuring stable and efficient training.
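As one example of these techniques, dropout can be sketched in a few lines. This is a minimal version of the commonly used "inverted" variant, which rescales the surviving activations during training; the function signature and sample values are assumptions for illustration.

```python
import random


def dropout(activations, drop_probability, rng):
    """Randomly zero activations during training and rescale the survivors."""
    if not 0.0 <= drop_probability < 1.0:
        raise ValueError("drop_probability must be in [0, 1)")
    keep_probability = 1.0 - drop_probability
    return [
        a / keep_probability if rng.random() < keep_probability else 0.0
        for a in activations
    ]


rng = random.Random(0)  # fixed seed so repeated runs give the same mask
activations = [1.0] * 10
print(dropout(activations, 0.5, rng))
```

Because a different random subset of neurons is silenced on each pass, the network cannot rely too heavily on any single unit, which tends to reduce overfitting. At inference time dropout is switched off.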