When Microsoft Research unveiled ResNet50 in 2015, the architecture redefined possibilities in computer vision. This convolutional neural network tackled a critical hurdle in extremely deep models: beyond a certain depth, adding layers made even training accuracy worse. Prior systems struggled as layers increased, with vanishing gradients a long-standing obstacle, but ResNet50’s residual blocks enabled stable training for networks 50 or more layers deep.

The model’s breakthrough came at the 2015 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). An ensemble of residual networks, the deepest with 152 layers, won the classification task with a 3.57% top-5 error rate. That is below the roughly 5.1% error estimated for a trained human annotator. This victory cemented residual networks as a cornerstone of modern artificial intelligence.

Beyond academic benchmarks, ResNet50’s design principles influenced industry practices. Its efficiency in processing visual data made it ideal for healthcare diagnostics, autonomous vehicles, and retail analytics. The architecture’s skip connections became standard tools for optimising deep neural networks.

Nearly a decade later, this framework remains relevant. Developers still use its pre-trained versions as backbone systems for new computer vision tasks. By balancing depth with computational practicality, ResNet50 set benchmarks that continue shaping AI development across sectors.

Understanding the Evolution of Deep Learning and ResNet

The journey to modern neural networks began decades before ResNet’s breakthrough, rooted in pioneering neuroanatomy studies. Early work by Lorente de Nó (1938) revealed biological neural systems using feedback loops – a concept later mirrored in artificial designs. McCulloch and Pitts expanded this in 1943, proposing mathematical models with residual pathways.

Historical Milestones in Neural Network Development

Frank Rosenblatt’s 1961 cross-coupled system marked a pivotal moment. His three-layer perceptron used skip connections to enhance pattern recognition – an idea ahead of its time. By the late 1980s, Lang and Witbrock demonstrated networks where layers connected to all subsequent tiers, foreshadowing modern architectures.

These innovations faced practical challenges. Early multi-layered models struggled with vanishing gradients during training. Each added layer risked reducing information flow, creating roadblocks for complex learning tasks.

The Emergence of Residual Connections

Researchers gradually recognised that direct pathways between layers could preserve critical data. Lang and Witbrock’s 1988 skip-layer experiments proved crucial, showing that alternative connection patterns could make difficult problems easier to train. Together with the biological precedent, this idea became key to solving depth-related issues.

By 2015, these historical developments coalesced into ResNet’s architecture. The solution combined decades of research into practical systems that finally unlocked deep network potential. Residual blocks transformed how machines process visual information, building on nearly eight decades of cumulative progress.

Foundational Concepts of Neural Networks and Residual Blocks

Convolutional neural networks revolutionised how machines interpret visual data through mathematical pattern recognition. These systems process images using layered operations that preserve spatial relationships while detecting features – from edges to complex shapes.

Fundamentals of Convolutional Neural Networks

At their core, CNNs employ convolution layers with learnable filters. Each filter slides across the input. At every position it multiplies its weights element-wise with the patch beneath it and sums the products, so each position yields one value of a feature map that responds to a local pattern. Subsequent pooling layers reduce dimensionality, maintaining critical features while controlling computational costs.
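Both operations can be sketched in pure Python. This minimal example assumes a single-channel image stored as nested lists, stride 1 and no padding for the convolution, and 2×2 non-overlapping max pooling. The function names `conv2d` and `max_pool2d` are illustrative and do not come from any library. As in most deep learning frameworks, the "convolution" is computed as cross-correlation, meaning the kernel is not flipped.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding); each output value is
    the sum of element-wise products between the kernel and the patch under it."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    out = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


# A 5x6 image with a vertical edge between columns 2 and 3.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
# A vertical-edge filter: responds where intensity rises from left to right.
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

features = conv2d(image, edge_kernel)  # 3x4 feature map
pooled = max_pool2d(features)          # 1x2 map after 2x2 pooling
print(features)  # [[0.0, 3.0, 3.0, 0.0], [0.0, 3.0, 3.0, 0.0], [0.0, 3.0, 3.0, 0.0]]
print(pooled)    # [[3.0, 3.0]]
```

The filter fires only in the columns straddling the edge, and pooling keeps that response while shrinking the map.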

Traditional architectures stack multiple convolution and pooling layers. Early layers capture basic textures, while deeper tiers assemble these into higher-level concepts. This hierarchical structure enables object recognition but faces limitations as depth increases.

Addressing the Vanishing Gradient Problem

Sepp Hochreiter’s 1991 research exposed a critical barrier: gradients diminishing during backpropagation. As networks grew deeper, weight updates in initial layers became negligible – halting training progress. This “vanishing gradient” issue caused perplexing performance drops in multi-layered systems.

Early solutions focused on choosing activation functions and adding normalisation:

| Approach | Behaviour | Impact |
|---|---|---|
| Sigmoid | Saturates at extremes | Exacerbates gradient loss |
| ReLU | Outputs zero for negative inputs, passes positive inputs unchanged | Reduces saturation risk |
| Batch Norm | Standardises layer inputs | Stabilises learning |

These methods eased gradient problems, but they did not resolve the degradation problem in deep architectures. The ResNet authors found that even batch-normalised plain networks reached higher training error at 34 layers than at 18. Residual connections later provided the breakthrough, giving gradients a direct route around layers through skip pathways.
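A toy numeric sketch shows why depth hurts and why shortcuts help. It assumes a chain of scalar "layers" with no weights, only a sigmoid activation. Under that assumption the gradient reaching the first layer is simply the product of the local derivatives. The sigmoid's derivative never exceeds 0.25, so a plain chain shrinks the gradient geometrically. A residual layer computing x + sigmoid(x) has derivative 1 + sigmoid'(x), which keeps the product from collapsing. This is an illustration of the chain rule, not a model of a real network.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # never exceeds 0.25 (its value at x == 0)


def first_layer_gradient(depth, residual, x0=0.0):
    """Chain rule through `depth` toy scalar layers, returning d(output)/d(input).

    Plain layer:    x_next = sigmoid(x)      local derivative: sigmoid'(x)
    Residual layer: x_next = x + sigmoid(x)  local derivative: 1 + sigmoid'(x)
    """
    x, grad = x0, 1.0
    for _ in range(depth):
        local = sigmoid_grad(x)
        if residual:
            grad *= 1.0 + local  # the identity shortcut contributes the "1"
            x = x + sigmoid(x)
        else:
            grad *= local
            x = sigmoid(x)
    return grad


for depth in (5, 20, 50):
    plain = first_layer_gradient(depth, residual=False)
    res = first_layer_gradient(depth, residual=True)
    print(f"{depth:>2} layers: plain {plain:.1e}, residual {res:.2f}")
```

The plain chain's gradient falls below 0.25 raised to the depth. The residual chain's gradient stays above 1 however many layers are stacked.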

What is ResNet50 in Deep Learning: An In-Depth Look

The 50-layer framework transformed computer vision by solving a fundamental paradox: deeper networks shouldn’t perform worse, yet they did. Residual networks cracked this through clever architectural design, enabling unprecedented model depth without accuracy loss.

Overview of ResNet50 Components

Four building blocks define the system:

- Convolution stacks extract spatial patterns through 7×7, 3×3 and 1×1 filters

- Batch normalisation layers stabilise activation distributions

- ReLU activations introduce non-linear decision boundaries

- Identity mappings create shortcut connections that let features bypass blocks

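This modular approach processes images through progressive abstraction. Early layers detect edges and textures, while deeper tiers assemble these into complex shapes. Padding keeps the spatial size constant within each stage. Shrinking happens in three places: the strided stem convolution, a max-pooling layer, and strided convolutions at stage boundaries. Together they reduce a 224×224 input to a 7×7 feature map, which global average pooling then collapses before classification.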
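The resolution at each stage can be traced with the standard output-size formula for a convolution or pooling window: out = floor((in + 2·padding − kernel) / stride) + 1. This sketch assumes the ResNet-50 layout for a 224×224 input, with stage names following the ResNet paper. That layout is a 7×7 stride-2 stem convolution with padding 3, a 3×3 stride-2 max pool with padding 1, and one stride-2 convolution at the start of stages conv3_x, conv4_x and conv5_x. The original paper puts that stride in a 1×1 convolution and torchvision puts it in the 3×3 one. Both choices give the same sizes, and the sketch uses the 3×3 form.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window (square inputs)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet50_spatial_sizes(input_size=224):
    sizes = {"input": input_size}
    s = conv_output_size(input_size, kernel=7, stride=2, padding=3)  # stem conv
    sizes["conv1"] = s
    s = conv_output_size(s, kernel=3, stride=2, padding=1)           # max pool
    sizes["conv2_x"] = s  # stride-1, padding-1 blocks keep this size
    for stage in ("conv3_x", "conv4_x", "conv5_x"):
        # The first block of each later stage halves the resolution.
        s = conv_output_size(s, kernel=3, stride=2, padding=1)
        sizes[stage] = s
    return sizes


print(resnet50_spatial_sizes())
# {'input': 224, 'conv1': 112, 'conv2_x': 56, 'conv3_x': 28, 'conv4_x': 14, 'conv5_x': 7}
```

A 3×3 convolution with stride 1 and padding 1 leaves the size unchanged: `conv_output_size(56, 3, 1, 1)` is 56. That is how padding preserves dimensions inside a stage.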

Key Structural Innovations in the Architecture

Residual blocks revolutionised training dynamics. Instead of forcing a stack of layers to learn a desired mapping H(x) directly, they learn a residual F(x), the adjustment to apply to the existing features:

F(x) = H(x) − x

Rearranged as H(x) = F(x) + x, this mathematical trick lets gradients flow directly through shortcut connections. Information bypasses multiple layers when needed, preventing signal degradation. The design reached roughly 76% top-1 accuracy on ImageNet. That is several percentage points above VGG-16 (about 71.5%), achieved with under a fifth of VGG-16’s parameters.

By combining these elements, the architecture balanced computational efficiency with learning capacity. It has roughly 25.6 million parameters, about 23.5 million excluding the final classification layer. That balance made it a standard baseline for computer vision tasks requiring both precision and speed.
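The parameter figure can be checked by counting weights layer by layer. This sketch assumes the common torchvision-style ResNet-50:

- convolutions without bias terms, because batch normalisation follows each one;
- two trainable values per channel (scale and shift) in each batch-normalisation layer;
- a 1×1 projection on the shortcut of the first block in each stage;
- a 1000-class fully connected head.

Batch-normalisation running statistics are buffers rather than trainable parameters, so they are not counted.

```python
def conv_params(c_in, c_out, k):
    return c_in * c_out * k * k  # no bias: batch norm follows each convolution


def bn_params(c):
    return 2 * c  # learnable scale (gamma) and shift (beta) per channel


def bottleneck_params(c_in, width, project):
    c_out = 4 * width  # bottleneck expansion factor of 4
    total = (conv_params(c_in, width, 1) + bn_params(width)      # 1x1 reduce
             + conv_params(width, width, 3) + bn_params(width)   # 3x3
             + conv_params(width, c_out, 1) + bn_params(c_out))  # 1x1 expand
    if project:  # assumed 1x1 projection shortcut where channel counts change
        total += conv_params(c_in, c_out, 1) + bn_params(c_out)
    return total


def resnet50_params(num_classes=1000):
    total = conv_params(3, 64, 7) + bn_params(64)  # 7x7 stem
    c_in = 64
    # (bottleneck width, number of blocks) for stages conv2_x .. conv5_x
    for width, blocks in ((64, 3), (128, 4), (256, 6), (512, 3)):
        for b in range(blocks):
            total += bottleneck_params(c_in, width, project=(b == 0))
            c_in = 4 * width
    backbone = total
    total += c_in * num_classes + num_classes  # fully connected head with bias
    return backbone, total


backbone, total = resnet50_params()
print(f"backbone: {backbone:,}  with head: {total:,}")
# backbone: 23,508,032  with head: 25,557,032
```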

The Revolutionary Architecture of ResNet50

Modern neural networks owe their depth capabilities to clever structural solutions. ResNet50’s blueprint introduced identity shortcut connections that transformed how layers communicate. These pathways enable information to bypass multiple processing stages, preserving critical data through additive operations.

Design Principles and Residual Blocks

The ResNet family employs two block variants for different depths. Basic blocks, used in ResNet-18 and ResNet-34, apply two 3×3 convolutions. Bottleneck blocks are used throughout ResNet-50 and its deeper siblings. They use 1×1 filters to compress and then expand feature dimensions around a single 3×3 convolution.

| Block Type | Layers | Operations | Used In |
|---|---|---|---|
| Basic | 2 convolutional | 3×3 → 3×3 | ResNet-18, ResNet-34 |
| Bottleneck | 3 convolutional | 1×1 → 3×3 → 1×1 | ResNet-50, ResNet-101, ResNet-152 |

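This bottleneck design sharply cuts parameter counts. Take a 256-channel input: the block reduces it to 64 channels with a 1×1 convolution, applies a 3×3 convolution at 64 channels, and expands back to 256 with another 1×1. That needs about 70,000 convolution weights, whereas two 3×3 convolutions at the full 256 channels would need about 1.18 million. As in every residual block, the layers learn residual mappings rather than complete transformations, expressed mathematically as: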

Output = F(input) + input
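The equation can be sketched in pure Python, treating a feature vector as a list of floats. The residual branch here is a hypothetical stand-in for F: a linear map, a ReLU, then another linear map. ResNet-50's real branch is a stack of convolution and batch-normalisation layers; the point of the sketch is the addition. Following the original paper, a ReLU is applied after the sum. If the branch's weights are all zero, the block returns the input unchanged for non-negative inputs, such as the outputs of an earlier ReLU. An added block can therefore start out as an identity mapping and cannot make the network worse at that point.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def linear(weights, v):
    """Matrix-vector product; `weights` is a list of rows."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def residual_branch(x, w1, w2):
    """Hypothetical stand-in for F: linear -> ReLU -> linear."""
    return linear(w2, relu(linear(w1, x)))


def residual_block(x, w1, w2):
    """Output = ReLU(F(x) + x): the shortcut adds the input back unchanged."""
    fx = residual_branch(x, w1, w2)
    return relu([f + a for f, a in zip(fx, x)])


x = [0.5, 1.0, 2.0]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_block(x, zeros, zeros))  # [0.5, 1.0, 2.0], an identity mapping

# Small weights: the block nudges x rather than replacing it.
w1 = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(residual_block(x, w1, identity))  # approximately [0.55, 1.1, 2.2]
```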

Efficiency and Optimisation in Deep Networks

Bottleneck blocks achieve computational thrift through dimension manipulation. The initial 1×1 convolution shrinks feature maps, allowing subsequent 3×3 operations at lower complexity. Final 1×1 layers restore dimensionality while maintaining critical patterns.

ResNet-50 processes a 224×224 image in about 3.8 billion FLOPs, counted as multiply-adds in the original paper. That compares with 15.3 billion for VGG-16 and 19.6 billion for VGG-19. Training convergence improves through direct gradient flow across all 50 layers, easing the signal degradation issues that plagued earlier systems.
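The saving from the bottleneck is easy to quantify. This sketch assumes a 56×56 feature map with 256 channels, the size at ResNet-50's first stage, and counts only convolution weights. It compares a bottleneck block (1×1 down to 64, 3×3 at 64, 1×1 back to 256) with a hypothetical pair of 3×3 convolutions kept at the full 256 channels. With stride 1 and same padding the map stays 56×56, so multiply-accumulate operations (MACs) are the weights times the 3,136 output positions.

```python
def conv_weights(c_in, c_out, k):
    return c_in * c_out * k * k


SIZE = 56                       # spatial size, unchanged by these stride-1 layers
POSITIONS = SIZE * SIZE         # output positions per channel
CHANNELS, WIDTH = 256, 64       # block input/output channels, bottleneck width

bottleneck = (conv_weights(CHANNELS, WIDTH, 1)   # 1x1: 256 -> 64
              + conv_weights(WIDTH, WIDTH, 3)    # 3x3: 64 -> 64
              + conv_weights(WIDTH, CHANNELS, 1))  # 1x1: 64 -> 256
full_width = 2 * conv_weights(CHANNELS, CHANNELS, 3)  # two 3x3: 256 -> 256

for name, weights in (("bottleneck", bottleneck), ("two 3x3 at 256", full_width)):
    print(f"{name:>15}: {weights:>9,} weights, {weights * POSITIONS:>13,} MACs")

print(f"ratio: {full_width / bottleneck:.1f}x")  # ratio: 16.9x
```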

ResNet50 in Real-World Computer Vision Applications

From academic benchmarks to factory floors, this architecture powers critical systems across industries. Its ability to process visual data with human-level precision makes it indispensable for modern computer vision applications requiring both speed and reliability.

Image Classification and Object Detection

ResNet50 excels at image classification tasks where pinpoint accuracy matters. Retail giants use it to categorise products from millions of inventory images, while security systems leverage its pattern recognition for threat detection.

The architecture forms the backbone of advanced object detection frameworks. Systems like Faster R-CNN utilise its feature extraction capabilities to:

- Locate multiple objects in complex scenes

- Supply per-frame detections that tracking systems use to follow moving elements in video

- Analyse spatial relationships between detected items

| Application Domain | Traditional Approach | ResNet50 Advantage | Accuracy Gain |
|---|---|---|---|
| Retail Analytics | Manual tagging | Automated SKU recognition | +34% |
| Surveillance | Motion sensors | Behaviour pattern analysis | +41% |
| Autonomous Vehicles | Rule-based systems | Pedestrian detection | +28% |

Medical Imaging and Industrial Automation

Hospitals now deploy ResNet50-powered tools for life-saving diagnostics. Reported results include detecting early-stage tumours in mammograms with 96% sensitivity, outperforming junior radiologists in clinical trials.

Manufacturing sectors benefit equally. Automotive plants use the architecture to:

- Identify micro-cracks in engine components

- Monitor paint consistency on assembly lines

- Detect sub-millimetre defects in welded joints

These computer vision solutions have reduced production errors by up to 62% in UK-based factories, demonstrating the architecture’s practical commercial value.

Leveraging ResNet50 for Transfer Learning

Modern machine learning teams achieve remarkable efficiency by repurposing proven architectures. Transfer learning with this framework allows developers to bypass resource-intensive training phases, particularly valuable when working with limited labelled datasets.

Utilising Pre-Trained Models Effectively

Pre-trained versions capture universal visual patterns through exposure to millions of images. Early layers identify basic shapes and textures – knowledge transferable across most computer vision tasks. Best practices include:

- Freezing initial convolution blocks during retraining

- Analysing domain similarity between source and target data

- Preserving batch normalisation statistics from original training

Fine-Tuning for Specific Computer Vision Tasks

Adaptation strategies vary based on application requirements:

| Scenario | Layers Retrained | Learning Rate | Typical Accuracy Gain |
|---|---|---|---|
| Similar domains | Last 2-3 blocks | 1e-4 | +22% |
| Divergent domains | Last 5 blocks | 1e-3 | +37% |

For medical imaging projects, teams often replace final dense layers while retaining edge-detection capabilities. This approach maintains performance with as few as 500 annotated X-rays versus 50,000 required for full model training.

Practical implementation tips:

- Gradually unfreeze layers during training to prevent catastrophic forgetting

- Use differential learning rates across network tiers

- Monitor validation loss after each unfreezing step to catch overfitting early
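Gradual unfreezing and differential learning rates can be combined in a per-epoch schedule. The sketch below is hypothetical. It assumes the network is split into six groups: the stem, the four ResNet-50 stages (conv2_x to conv5_x) and a newly added classification head. Training starts with only the head. One more group from the top is unfrozen every `epochs_per_stage` epochs, and each group further from the head gets its learning rate divided by `decay`. The group names and default values are illustrative choices, not settings from a specific library. The returned dictionary would be mapped onto optimiser parameter groups in whichever framework is used.

```python
# Hypothetical grouping of a ResNet-50, ordered from input to output.
GROUPS = ["stem", "conv2_x", "conv3_x", "conv4_x", "conv5_x", "head"]


def unfreeze_schedule(epoch, base_lr=1e-4, decay=2.0, epochs_per_stage=2):
    """Return {group: learning_rate} for the groups trainable at `epoch`.

    Groups missing from the result stay frozen. The head trains at base_lr;
    each earlier group gets base_lr / decay ** (its distance from the head).
    """
    n_trainable = min(len(GROUPS), 1 + epoch // epochs_per_stage)
    trainable = GROUPS[-n_trainable:]
    return {
        name: base_lr / decay ** (len(GROUPS) - 1 - GROUPS.index(name))
        for name in trainable
    }


for epoch in (0, 2, 4, 10):
    print(epoch, {name: f"{lr:.1e}" for name, lr in unfreeze_schedule(epoch).items()})
```

Early epochs adapt only the new head, protecting the pre-trained features from large, noisy updates. Generic edge and texture detectors in the stem are unfrozen last and move slowest.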

Performance, Benefits and Limitations of ResNet50

Balancing computational demands with precision remains central to evaluating modern neural frameworks. This architecture demonstrates remarkable versatility across vision tasks while presenting unique deployment considerations.

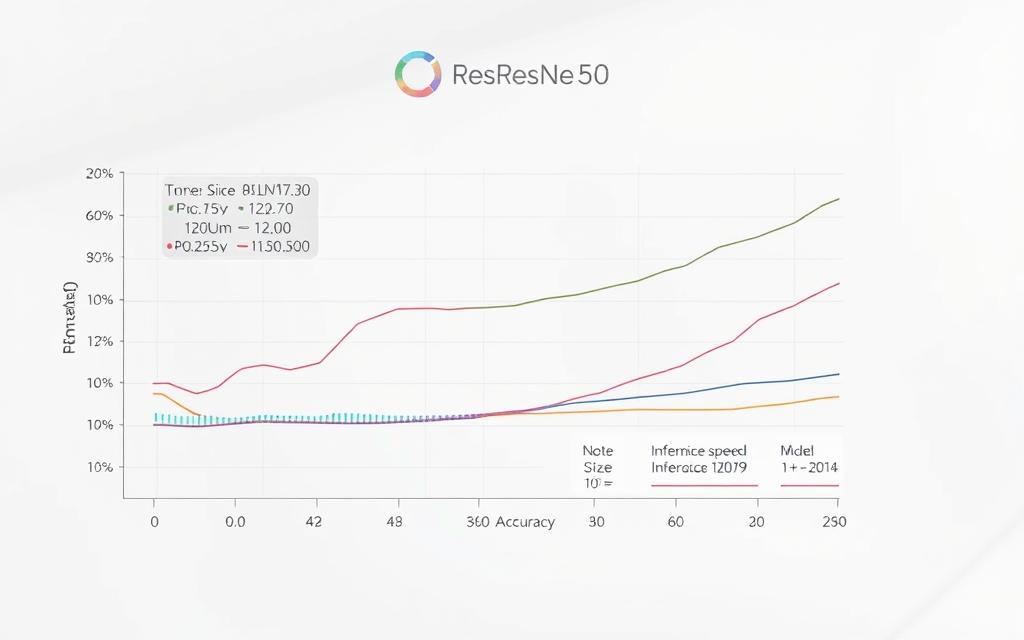

Strengths in Accuracy and Robustness

The model achieves about 76.5% top-1 accuracy on ImageNet, roughly five percentage points ahead of VGG16 with under a fifth of its parameters. Residual connections enable consistent performance across varied domains, from medical scans to satellite imagery analysis.

Comparative benchmarks reveal key advantages:

| Framework | Parameters | Top-1 Accuracy | Relative Inference Speed |
|---|---|---|---|
| VGG16 | 138M | 71.5% | 0.8x |
| ResNet50 | 25.6M | 76.5% | 1.2x |
| MobileNetV2 | 3.4M | 71.8% | 2.3x |

Skip connections enhance generalisation by preserving gradient flow. This design reduces accuracy degradation when adapting pre-trained networks to new tasks through transfer learning techniques.

Challenges and Overfitting Concerns

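Training the full architecture at typical batch sizes needs several gigabytes of GPU memory, mostly for activations stored for backpropagation. At inference, its roughly 3.8 billion FLOPs per 224×224 image strain edge devices. Real-time applications often require lighter variants or model compression.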
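For scale, the memory taken by the weights alone follows directly from the parameter count and the numeric precision. This sketch assumes the 25,557,032 parameters of the common torchvision ResNet-50 with a 1000-class head. It ignores activations and framework overhead, which dominate during training. The Adam line assumes four float32 values per parameter: the weight, its gradient and Adam's two moment estimates.

```python
PARAMS = 25_557_032  # assumed: torchvision-style ResNet-50 with a 1000-class head

BYTES_PER_VALUE = {"float32": 4, "float16": 2, "int8": 1}


def weight_megabytes(dtype, copies=1):
    """Megabytes (10**6 bytes) for `copies` values per parameter at `dtype`."""
    return PARAMS * BYTES_PER_VALUE[dtype] * copies / 1e6


for dtype in BYTES_PER_VALUE:
    print(f"weights in {dtype:>7}: {weight_megabytes(dtype):6.1f} MB")

# Weights, gradients and two Adam moment estimates, all in float32,
# before any activations are stored.
print(f"Adam training state: {weight_megabytes('float32', copies=4):6.1f} MB")
```

The weights need about 102.2 MB in float32, 51.1 MB in float16 and 25.6 MB in int8, and the Adam training state about 408.9 MB. Most of a multi-gigabyte training footprint therefore comes from activations, which grow with batch size and input resolution.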

Common pitfalls include:

- Overfitting risks with datasets under 10,000 samples

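- Input size expectations: pre-trained weights are tuned for 224×224 pixels, and although global average pooling accepts other sizes, accuracy can drop without fine-tuning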

- Memory bottlenecks during batch processing

Effective regularisation combines spatial dropout (rate=0.5) with aggressive augmentation. Progressive resizing during training helps mitigate resolution limitations without architectural changes.

Advancements Inspired by ResNet50 in Modern AI Research

Residual learning principles have become foundational in contemporary neural architectures. The breakthrough demonstrated that networks could achieve unprecedented depth without performance degradation, catalysing a wave of architectural innovations across machine learning.

Pioneering New Design Paradigms

Subsequent frameworks like DenseNet and EfficientNet built upon residual concepts. DenseNet connects each layer to every subsequent layer within a block, enhancing feature reuse while reducing parameter counts. EfficientNet’s building blocks keep residual shortcuts. Skip connections now appear in most state-of-the-art vision models.

Recent transformer-based architectures integrate residual mechanisms for stability. Hybrid designs merge self-attention layers with identity mappings, achieving state-of-the-art results in medical image segmentation. Such developments prove the enduring relevance of core ResNet principles.

From generative adversarial networks to reinforcement learning, residual shortcuts have become universal optimisation tools. Their adoption in natural language processing systems underscores the framework’s cross-domain influence, reshaping AI development far beyond initial computer vision applications.

FAQ

How do residual connections improve neural network performance?

Residual connections address vanishing gradient issues by allowing information to bypass layers via skip connections. This design preserves gradient flow during backpropagation, enabling deeper architectures like ResNet50 to achieve higher accuracy without degradation.

What distinguishes ResNet50 from earlier convolutional neural networks?

Unlike traditional CNNs, ResNet50 introduces identity mappings and bottleneck layers within residual blocks. These innovations reduce parameter counts while maintaining learning capacity, enhancing efficiency for tasks like image classification and object detection.

Why is ResNet50 particularly effective for medical imaging applications?

The architecture’s ability to recognise intricate patterns in high-resolution datasets makes it suitable for analysing X-rays or MRI scans. Transfer learning with pre-trained models further accelerates deployment in healthcare diagnostics and industrial quality control systems.

Can ResNet50 be adapted for real-time processing tasks?

While optimised for accuracy, modifications like layer pruning or quantisation can improve inference speed. Frameworks such as TensorFlow Lite and ONNX Runtime enable deployment on edge devices, usually with only a small loss in classification accuracy.
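Quantisation reduces, at its core, to a small piece of arithmetic. This minimal sketch shows symmetric per-tensor int8 quantisation, assuming a plain list of float weights. The largest magnitude is mapped to 127, every weight is rounded to the nearest integer step, and dequantisation multiplies back by the scale. Production toolchains such as TensorFlow Lite and ONNX Runtime add calibration, per-channel scales and integer kernels on top of this.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation: map [-max|w|, max|w|] onto [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid zero scale for all-zero input
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Each weight now needs one byte instead of four. The rounding error per weight is at most half a quantisation step, which is why accuracy usually drops only slightly.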

How does batch normalisation contribute to ResNet50’s stability?

Batch normalisation standardises layer inputs using mini-batch statistics, which its authors credited with reducing internal covariate shift during training. It works alongside residual blocks to permit higher learning rates and speed up convergence. It also adds a mild regularising effect that can help limit overfitting in complex vision tasks.
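The standardisation step itself is short. This sketch normalises one feature across a mini-batch using the batch mean and (biased) variance, then applies the learnable scale `gamma` and shift `beta`. The small constant `eps` guards against division by zero. It covers training-mode behaviour only. At inference, frameworks substitute running averages of the mean and variance collected during training.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """y = gamma * (x - mean) / sqrt(var + eps) + beta, over one mini-batch."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased variance, as in training mode
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


batch = [2.0, 4.0, 6.0, 8.0]
out = batch_norm(batch)
print([round(v, 3) for v in out])  # [-1.342, -0.447, 0.447, 1.342]
```

Whatever the scale of the incoming activations, the next layer sees values with roughly zero mean and unit variance. `gamma` and `beta` let the network undo this when useful.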

What hardware requirements are necessary for training ResNet50 models?

Efficient training typically requires GPUs with substantial VRAM, such as NVIDIA’s A100 or V100 accelerators. Cloud platforms like AWS SageMaker and Google Colab offer scalable solutions for handling large-scale datasets like ImageNet.

Are there lightweight alternatives to ResNet50 for mobile applications?

Architectures like MobileNetV3 or EfficientNet-Lite provide similar capabilities with reduced computational demands. These models employ depth-wise separable convolutions to maintain accuracy while enhancing on-device processing efficiency.
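The saving from depth-wise separable convolutions can be counted directly. Assume a 3×3 layer mapping 256 channels to 256 channels, ignoring biases. A standard convolution needs k·k·C_in·C_out weights. The separable version needs k·k·C_in for the per-channel (depth-wise) filters plus C_in·C_out for the 1×1 (point-wise) step that mixes channels.

```python
def standard_conv_weights(c_in, c_out, k):
    return k * k * c_in * c_out


def separable_conv_weights(c_in, c_out, k):
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1x1 convolution mixes channels
    return depthwise + pointwise


std = standard_conv_weights(256, 256, 3)
sep = separable_conv_weights(256, 256, 3)
print(std, sep, f"{std / sep:.1f}x fewer")  # 589824 67840 8.7x fewer
```

The same ratio applies to multiply-accumulate operations, since both layers produce the same number of output positions.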