Modern computing achieves remarkable feats by drawing on nature’s most complex creation: the human brain. Artificial neural networks form the backbone of contemporary machine learning, loosely modelling biological neurons as interconnected nodes. These systems process information in layers, transforming raw data into meaningful outputs – much like cognitive reasoning.

Computer scientists developed these adaptive models to recognise patterns with uncanny accuracy. Unlike rigid programming, neural networks adjust their connections through exposure to information. This learning capability allows them to interpret images, translate languages, and predict trends – tasks once exclusive to human intelligence.

The breakthrough lies in mimicking synaptic interactions. Each artificial neuron activates when its weighted inputs cross a threshold, passing signals on through weighted connections. During training, an algorithm refines these weights, enabling systems to improve their responses without manual intervention.
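
The threshold-and-weights idea can be made concrete with the classic perceptron learning rule, one of the simplest ways weights are refined from examples. The sketch below is illustrative only: it assumes a step activation, a learning rate of 0.1 and the logical AND function as toy data, and `predict` and `train_perceptron` are hypothetical helper names rather than any library's API.

```python
def predict(weights, bias, inputs):
    # The neuron "fires" (outputs 1) only when the weighted sum crosses the threshold 0
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

def train_perceptron(samples, lr=0.1, max_epochs=100):
    """Perceptron learning rule: after each mistake, nudge weights towards the right answer."""
    n = len(samples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly, so stop early
            break
    return weights, bias

# Logical AND: only (1, 1) should make the neuron fire
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
print([predict(weights, bias, x) for x, _ in and_gate])
```

Because AND is linearly separable, the perceptron rule stops making mistakes after a finite number of updates. Modern networks replace the hard threshold with smooth activation functions so that gradient-based training can be used instead.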

Today’s applications span healthcare diagnostics to financial forecasting, showcasing versatility rooted in biological inspiration. By approximating neural processes through mathematics, engineers create tools that learn from experience rather than follow predefined rules.

This foundation supports advanced developments like deep learning architectures. Understanding these principles unlocks insights into how machines now achieve feats once deemed uniquely human – from creative problem-solving to contextual decision-making.

Introduction to Neural Networks

Contemporary AI overcomes traditional limits using neuron-like structures that self-optimise through exposure to information. Unlike conventional code-bound systems, these adaptive networks learn their own rules from examples – automating tasks ranging from fraud detection to medical diagnoses.

Their power stems from handling unstructured data at scale. Where humans struggle with billions of data points, neural networks identify hidden relationships through layered processing. This capability transforms raw inputs into organised clusters – think sorting customer feedback or grouping genetic markers.

Some financial institutions report that these systems detect transaction anomalies with up to 98% accuracy. “The shift from rigid algorithms to fluid, learning-based models represents the biggest leap since programmable computers,” notes a Cambridge AI researcher.

Key advantages include:

- Dynamic pattern recognition across messy datasets

- Continuous improvement without manual updates

- Cross-industry applications from voice assistants to stock predictions

By serving as intelligent filters between storage systems and decision-makers, neural networks turn noise into strategy. Their biological inspiration lets machines approximate some forms of human judgement, such as spotting patterns, only faster and at enterprise scale.

What is a neural network and how does it work?

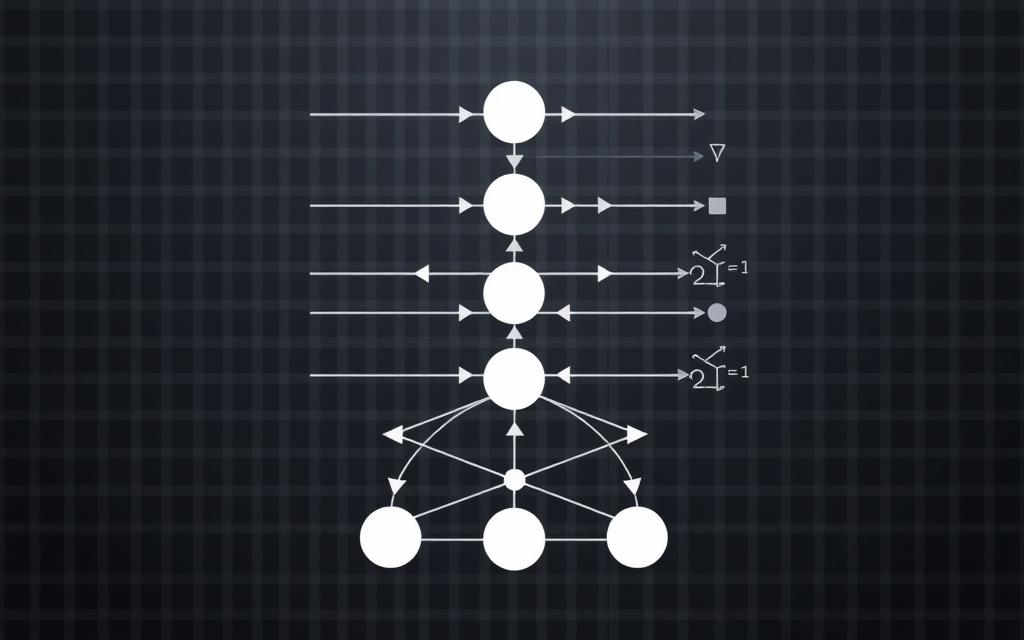

At their core, these adaptive systems process data through layered computational units. Raw information enters through an input layer, where individual nodes represent distinct data features. A handwritten digit recognition system, for instance, might use 784 input neurons – one for each pixel in a 28×28 image.

Three critical components drive operations:

- Weighted connections that amplify or suppress signals

- Activation functions determining neuron output

- Hidden layers extracting progressive abstractions

Each connection carries a numerical weight adjusted during learning phases. When recognising faces, heavier weights might prioritise eye spacing over background details. “The true magic happens in hidden layers,” explains DeepMind researcher Eleanor Whitmore. “These middle stages build hierarchical representations – edges become noses, noses become faces.”

Data undergoes transformation through successive layers:

- Input normalisation

- Feature detection

- Pattern aggregation

The final output layer converts processed signals into actionable results – whether classifying images or predicting energy consumption. Modern implementations achieve 95%+ accuracy in speech recognition through this layered refinement process.
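
A minimal forward pass through the layers described above might look like the following sketch. It assumes a 784-neuron input layer for a 28×28 image, a single hypothetical hidden layer of 32 ReLU neurons and a 10-neuron softmax output for the digits 0 to 9. The weights are random rather than trained, so the predicted digit is meaningless until the network has learned; plain Python lists keep the example self-contained, whereas real systems use array libraries.

```python
import math
import random

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w · x + b) for each output neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(z):
    return max(0.0, z)

def softmax(zs):
    m = max(zs)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    # Small random weights and zero biases; training would adjust these values
    return ([[rng.gauss(0.0, 0.05) for _ in range(n_in)] for _ in range(n_out)],
            [0.0] * n_out)

rng = random.Random(42)
W1, b1 = init_layer(784, 32, rng)  # input layer (784 pixels) -> hidden layer
W2, b2 = init_layer(32, 10, rng)   # hidden layer -> output layer (digits 0-9)

pixels = [rng.randint(0, 255) for _ in range(28 * 28)]  # stand-in for a real image
x = [p / 255.0 for p in pixels]                          # input normalisation
hidden = dense(x, W1, b1, relu)                          # feature detection
logits = dense(hidden, W2, b2, lambda z: z)              # pattern aggregation
probs = softmax(logits)                                  # output layer: class probabilities
print("predicted digit:", probs.index(max(probs)))
```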

Through continuous exposure to datasets, networks recalibrate their parameters autonomously. This self-optimisation enables applications from real-time language translation to predictive maintenance in manufacturing plants from Manchester to Bristol.

The History and Evolution of Neural Networks

The journey of neural networks began long before their current AI dominance. In 1958, psychologist Frank Rosenblatt developed the perceptron, a model with sensory, association and response units that mimicked basic decision-making, although only its final layer of connections learned. This early model could classify simple patterns, sparking both excitement and scepticism across academia.

By 1965, Soviet researchers Alexey Ivakhnenko and Valentin Lapa had created what is often described as the first functional deep learning algorithm. Their Group Method of Data Handling used polynomial activation functions, laying groundwork for modern architectures. Yet limited computing power and crude training methods stalled progress for decades.

Two AI winters, in the 1970s and again from the late 1980s into the 1990s, slowed development. Early computers lacked the muscle to process complex networks. “We had revolutionary ideas trapped in stone-age hardware,” recalled MIT professor Marvin Minsky. Backpropagation, popularised in 1986, finally provided efficient weight adjustment – but training still demanded impractical processing time on the hardware of the day.

Three breakthroughs reignited the field:

- Graphics cards repurposed for parallel computations (2000s)

- Explosion of digital training data (2010s)

- Cloud computing enabling scalable resources

Today’s systems build upon these historical foundations. From Rosenblatt’s perceptron to GPT-4, persistent innovation transformed theoretical concepts into tools reshaping healthcare, finance and creative industries.

Inspiration from the Human Brain

Biological systems have long served as blueprints for technological innovation. Early computer scientists observed the human brain’s ability to process information through interconnected neurons. This biological architecture inspired the first artificial neural networks, designed to replicate distributed communication between cells.

Biological neurons feature dendrites receiving signals, cell bodies processing inputs, and axons transmitting outputs. Similarly, computational nodes pass information through weighted connections. “We borrowed nature’s wiring diagram,” explains Dr Helena Cross, a Cambridge neuroscientist. “But modern systems prioritise mathematical efficiency over biological accuracy.”

Three key parallels emerged:

- Synaptic strength influencing signal transmission (modelled as adjustable weights)

- Layered processing transforming raw data into abstract concepts

- Adaptive learning through repeated exposure

Contemporary neural networks have evolved beyond direct brain mimicry. While early models aimed to mirror biological processes, today’s systems use optimised equations for specific tasks. The human brain remains a conceptual touchstone rather than an engineering template.

This distinction matters. Artificial networks lack consciousness or biological constraints. They excel at pattern recognition through mathematical adjustments, not conscious thought. Understanding this separation helps demystify AI while appreciating its biological roots.

Understanding Artificial Neural Networks

Modern industries harness mathematical models that transform raw data into strategic insights. At the heart of this revolution lie artificial neural networks – computational systems powering tools from ChatGPT to self-driving car algorithms. These universal approximators excel at mapping complex relationships between inputs and outputs, achieving what traditional programming cannot.

Large language models such as OpenAI’s GPT-4 (which powers Microsoft’s Bing chat) and Meta’s Llama demonstrate this capability. By analysing billions of text examples, they learn linguistic patterns through layered neural networks. “The beauty lies in their adaptability,” notes Cambridge AI researcher Dr Imran Khan. “They don’t follow rules – they discover correlations through exposure.”

| Approach | Flexibility | Data Handling | Applications |
|---|---|---|---|
| Traditional Programming | Fixed logic | Structured inputs | Calculators, databases |
| Artificial Neural Networks | Self-adjusting | Unstructured data | Medical diagnosis, stock trading |

Three key strengths define these systems:

- Processing high-dimensional data like images or voice recordings

- Improving accuracy through iterative learning cycles

- Adapting to new tasks through retraining or fine-tuning rather than hand-rewritten rules

Financial institutions use artificial neural architectures to detect fraudulent transactions. Healthcare systems employ them for tumour identification in MRI scans. This versatility stems from their layered design – each level extracts more abstract features until patterns emerge.

As neural networks evolve, they redefine possibilities in machine intelligence. Their mathematical foundation enables continuous improvement, making them indispensable in our data-driven world.

Deep Learning and Neural Network Architectures

Deep learning revolutionises artificial intelligence through layered architectures that dissect data like digital onions. These deep neural networks employ multiple processing tiers, each extracting increasingly abstract patterns. Where traditional models see pixels, deep systems recognise shapes; where others hear sounds, they discern speech rhythms.

Common architectures include:

- Convolutional networks dissect images through spatial filters

- Recurrent models track sequences in language or stock prices

- Transformers process text using attention mechanisms

- Generative adversarial networks (GANs) create synthetic media

- Fully connected networks handle general classification tasks
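
The transformer entry above mentions attention mechanisms. As a rough sketch of the core calculation, scaled dot-product attention scores each query against every key, turns the scores into weights with a softmax, and averages the value vectors using those weights. This simplified version assumes no learned projection matrices, a single attention head and no masking, all of which real transformers add; the toy token vectors are arbitrary.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d)) V, one query at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention: the same vectors serve as queries, keys and values
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(tokens, tokens, tokens)
for row in attn:
    print([round(w, 3) for w in row])
```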

“Depth isn’t just layer count – it’s about hierarchical understanding,” explains DeepMind engineer Priya Sharma. Modern systems might use 100+ layers to analyse cancer scans, each tier isolating cells, then tumours, then malignancy indicators.

This architectural diversity enables specialised solutions. Financial institutions deploy deep learning models with temporal layers to predict market shifts. Streaming services use recommendation engines with embedding layers that map user preferences.

The magic lies in progressive abstraction. Early layers detect edges in photos, middle tiers assemble shapes, final stages identify objects. This loosely echoes the layered processing of the human visual system, but at speeds and data volumes no person could match.
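
Edge detection in early layers can be illustrated with a single convolution. In the sketch below the 3×3 vertical-edge filter is written by hand purely for illustration; a trained convolutional network learns its own filter values. Like most deep learning libraries, the function actually computes cross-correlation (the kernel is not flipped) and uses no padding.

```python
def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation of a list-of-lists image with a small kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# A 5x6 image: dark (0) on the left, bright (1) on the right
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter; a trained CNN would learn filters like this
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

for row in convolve2d(image, vertical_edge):
    print(row)
```

Each output row reads `[0, 3, 3, 0]`: the filter responds strongly where its window straddles the dark-to-bright boundary and stays at zero over the flat regions on either side.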

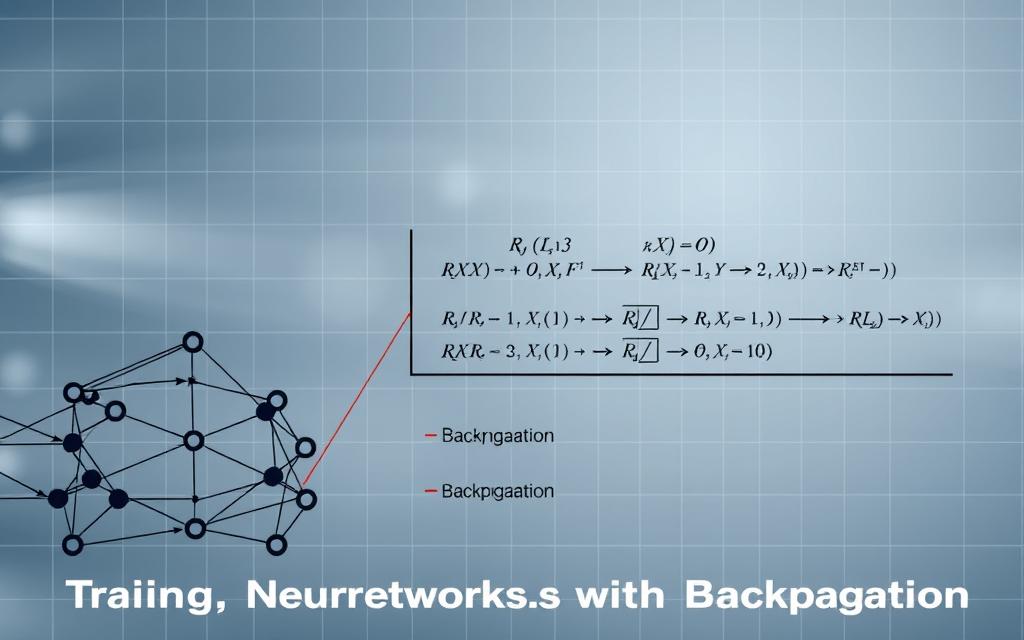

Training Neural Networks with Backpropagation

Teaching machines to learn requires precise adjustments, much like honing a craftsperson’s skills through practice. Backpropagation serves as the cornerstone of this educational process, enabling systems to refine their performance systematically. This algorithm compares predictions against actual outcomes, then works backwards to distribute responsibility for errors across network layers.

Understanding Backpropagation

The process operates through three phases: forward data flow, error calculation, and weight adjustments. During training, input signals travel through the network to generate outputs. Discrepancies between expected and actual results trigger error signals that propagate backwards. Each neuron’s contribution to the mistake determines how much its connections change.

Paul Werbos first applied this concept to neural networks in 1982, building on Seppo Linnainmaa’s 1970 mathematical framework. David Rumelhart, Geoffrey Hinton and Ronald Williams popularised the technique in 1986, enabling multi-layered systems to learn complex patterns. Modern implementations use the chain rule of calculus to calculate gradients efficiently across millions or even billions of parameters.
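
The three phases and the chain rule can be sketched for a tiny network. This is a minimal illustration, assuming two inputs, one hidden layer of sigmoid neurons, a squared-error loss, plain stochastic gradient descent with a learning rate of 0.5 and logical OR as toy data; `TinyNet` and `check_hidden_gradient` are hypothetical names. The finite-difference check at the end is a common way to confirm that hand-derived gradients are correct.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """Illustrative 2-input, n-hidden, 1-output network with sigmoid units."""

    def __init__(self, n_hidden=3, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Phase 1: forward data flow (hidden activations are kept for the backward pass)
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hi for w, hi in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, x, target):
        # Phase 2: error calculation (squared error)
        _, y = self.forward(x)
        return 0.5 * (y - target) ** 2

    def gradients(self, x, target):
        # Phase 3: chain rule, from the output layer back towards the inputs
        h, y = self.forward(x)
        delta_out = (y - target) * y * (1.0 - y)  # dLoss/dz for the output neuron
        g_w2 = [delta_out * hi for hi in h]
        # Each hidden neuron's share of the error, scaled by its outgoing weight
        delta_hidden = [delta_out * w * hi * (1.0 - hi) for w, hi in zip(self.w2, h)]
        g_w1 = [[d * xi for xi in x] for d in delta_hidden]
        return g_w1, delta_hidden, g_w2, delta_out

    def train_step(self, x, target, lr=0.5):
        # Weight adjustment: move every parameter against its gradient
        g_w1, g_b1, g_w2, g_b2 = self.gradients(x, target)
        for j, row in enumerate(self.w1):
            for i in range(len(row)):
                row[i] -= lr * g_w1[j][i]
            self.b1[j] -= lr * g_b1[j]
            self.w2[j] -= lr * g_w2[j]
        self.b2 -= lr * g_b2

def check_hidden_gradient(net, x, target, j=0, i=0, eps=1e-6):
    """Absolute gap between the backprop gradient for w1[j][i] and a central difference."""
    analytic = net.gradients(x, target)[0][j][i]
    original = net.w1[j][i]
    net.w1[j][i] = original + eps
    loss_plus = net.loss(x, target)
    net.w1[j][i] = original - eps
    loss_minus = net.loss(x, target)
    net.w1[j][i] = original
    return abs(analytic - (loss_plus - loss_minus) / (2 * eps))

# Logical OR as a toy training set
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
net = TinyNet()
before = sum(net.loss(x, t) for x, t in data)
for _ in range(2000):
    for x, t in data:
        net.train_step(x, t)
after = sum(net.loss(x, t) for x, t in data)
print(f"total loss before: {before:.4f}, after: {after:.4f}")
```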

Overcoming the Vanishing Gradient

Sepp Hochreiter’s 1991 research exposed a critical flaw: error signals diminish exponentially in deep networks. Early layers received negligible feedback, stalling training progress. Contemporary solutions include:

- Rectified Linear Units (ReLUs) maintaining stronger gradients

- Batch normalisation stabilising layer inputs

- Skip (residual) connections giving gradients a shortcut path around layers

These innovations allow systems like advanced language models to train effectively across 100+ layers. By preserving error information throughout the network, engineers achieve unprecedented accuracy in tasks from medical imaging to autonomous navigation.
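
Why gradients vanish can be seen by multiplying the per-layer derivative factors that the chain rule produces. The sketch below makes a strong simplifying assumption: a stack one neuron wide with every weight equal to 1, so only the activation derivatives affect the signal. The sigmoid's derivative never exceeds 0.25, whereas ReLU's derivative is exactly 1 for positive inputs.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # largest value is 0.25, reached at z = 0

def relu_derivative(z):
    return 1.0 if z > 0 else 0.0  # exactly 1 for any positive input

def gradient_through_depth(derivative, pre_activations):
    """Chain-rule product of activation derivatives down a stack one neuron wide
    (all weights assumed to be 1, so only the activations shrink the signal)."""
    grad = 1.0
    for z in pre_activations:
        grad *= derivative(z)
    return grad

depth = 20
print(f"sigmoid, best case: {gradient_through_depth(sigmoid_derivative, [0.0] * depth):.2e}")
print(f"ReLU, positive inputs: {gradient_through_depth(relu_derivative, [1.0] * depth):.2e}")
```

Even in the sigmoid's best case, the gradient reaching the first layer shrinks by a factor of 4 per layer, to about 9.1 × 10⁻¹³ after 20 layers, while the ReLU product stays at 1. Skip connections tackle the same problem from another angle by adding an identity path whose derivative is 1.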

The Role of Weights, Inputs, and Activation Functions

Adaptive learning systems rely on mathematical mechanisms to process information effectively. These components act as traffic controllers, determining which signals influence outcomes in computational models.

Fundamentals of Adjustable Parameters

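Weights act as decision-making filters, multiplying incoming data to prioritise critical patterns. A positive weight such as +0.8 lets a feature, say a tumour indicator in a medical scan, push a neuron towards firing, while a negative weight such as -0.3 pushes it away, dampening irrelevant background signals. Biases shift each neuron’s activation threshold, so it can respond even when inputs are weak or stay quiet when they are not; the non-linearity itself comes from activation functions.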

Inputs carry raw information – pixel values in images or word frequencies in texts. Through weighted combinations, networks transform these signals into actionable insights. This layered weighting process enables models to focus on relevant features while ignoring distractions.
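
The weighted sum and bias can be sketched for a single neuron. The two input features and their values are hypothetical, the +0.8 and -0.3 weights come from the example in the text, and a ReLU activation is assumed. Changing only the bias shows how it moves the neuron's firing threshold.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of inputs plus bias, passed through a ReLU activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, z)

# Hypothetical features: [tumour-like texture score, background noise level]
features = [0.9, 0.6]
weights = [0.8, -0.3]  # positive weight excites the neuron, negative weight inhibits it

for bias in (-0.8, 0.0, 0.2):
    print(f"bias={bias:+.1f} -> output {neuron(features, weights, bias):.2f}")
```

With these inputs the weighted sum is 0.54, so a bias of -0.8 keeps the neuron silent (output 0), while biases of 0.0 and 0.2 let it fire with outputs of 0.54 and 0.74.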

Gatekeepers of Signal Progression

Activation functions decide whether, and how strongly, processed inputs trigger neuron responses. The ReLU (Rectified Linear Unit), pioneered by Kunihiko Fukushima, became revolutionary for its simplicity: outputs equal inputs above zero, and zero otherwise. This loosely echoes biological neurons, which stay silent below a threshold, although unlike their all-or-nothing spikes, a ReLU’s output grows with its input.

The sigmoid function squashes values into the range 0 to 1, which suits probability estimates in binary classification; tanh maps values into the range -1 to 1 and is common in hidden and recurrent layers. Modern architectures often stack different activation types, creating sophisticated decision pathways. These mathematical gates enable networks to model complex phenomena – from stock market trends to protein folding patterns.
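
The activation functions discussed here are short enough to write out directly. This sketch compares them with a step function, the closest analogue of a biological neuron's all-or-nothing firing; the sample inputs are arbitrary.

```python
import math

def step(z):     # all-or-nothing: fires fully or not at all
    return 1.0 if z > 0 else 0.0

def relu(z):     # zero below the threshold, then grows linearly with the input
    return max(0.0, z)

def sigmoid(z):  # squashes any input into the range 0 to 1
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):     # squashes into the range -1 to 1, centred on zero
    return math.tanh(z)

for z in (-2.0, 0.0, 0.5, 3.0):
    print(f"z={z:5}: step={step(z)}, relu={relu(z)}, "
          f"sigmoid={sigmoid(z):.3f}, tanh={tanh(z):.3f}")
```

The step function gives the same output for 0.5 and 3.0, whereas ReLU passes the difference in magnitude on to the next layer, which is what makes gradient-based training possible.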

Together, these elements form dynamic ecosystems where weights evolve through experience, inputs fuel computations, and activation rules govern information flow. Their interplay powers systems that learn, adapt, and improve – reshaping industries from Edinburgh to Cardiff.

FAQ

How do artificial neural networks differ from biological ones?

Artificial neural networks simplify biological processes by using mathematical models. While biological neurons transmit electrochemical signals, artificial ones process data through weighted connections and activation functions like ReLU or sigmoid.

Why are deep neural networks preferred for complex tasks?

Deep architectures excel at hierarchical feature extraction. Multiple hidden layers enable learning intricate patterns in data, making them ideal for tasks like speech recognition or medical image analysis.

What challenges arise during training neural networks?

Common issues include overfitting, where models memorise training data instead of learning general patterns, and vanishing gradients in deep networks. Dropout helps curb overfitting, while batch normalisation, ReLU activations and skip connections help gradients flow through deep stacks.
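
Dropout is simple to sketch. This version assumes the common "inverted dropout" formulation, where surviving activations are scaled up by 1/(1 - p) during training so nothing needs rescaling at inference time; the function name and the 10,000 dummy activations are illustrative.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1 - p)."""
    if not training or p == 0.0:
        return list(activations)  # at inference time every unit is kept, unscaled
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
hidden = [1.0] * 10_000
dropped = dropout(hidden, p=0.5, rng=rng)
print("fraction zeroed:", dropped.count(0.0) / len(dropped))
print("mean activation:", sum(dropped) / len(dropped))  # stays close to 1.0 on average
```

Because a different random subset of units is silenced on every training step, no single neuron can rely on specific partners, which discourages the network from memorising its training data.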

How does backpropagation improve model accuracy?

Backpropagation calculates error gradients across layers; an optimisation algorithm such as stochastic gradient descent or Adam then uses those gradients to adjust the weights. Repeating this cycle minimises prediction errors during training.
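
The division of labour between backpropagation and the optimiser can be sketched with a one-parameter Adam update. Here the gradient comes from a hypothetical toy loss, (x - 3)², rather than from backpropagation, and the learning rate of 0.1 is chosen for this toy problem (0.001 is a common default in practice); the other hyperparameters follow values commonly used with Adam.

```python
import math

def adam_minimise(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimise a one-parameter function with Adam, given a function returning its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)                          # in a real network, backpropagation supplies this
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Toy loss (x - 3)^2 has gradient 2(x - 3) and its minimum at x = 3
print(adam_minimise(lambda x: 2 * (x - 3), x0=0.0))
```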

Which industries use neural networks most extensively?

Healthcare employs them for disease detection, while finance applies them to fraud detection and algorithmic trading. Autonomous systems like Tesla’s Autopilot rely on neural networks, including convolutional networks, for real-time perception of the road.

Can neural networks replicate human creativity?

While tools like DALL-E generate novel images, they lack intentionality. Current systems recombine learned patterns rather than exhibit true creative reasoning.

What role do activation functions play in performance?

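Functions like tanh introduce non-linearity, enabling networks to model complex relationships. The choice also affects training speed: saturating functions such as sigmoid and tanh produce near-zero gradients for large inputs, which can slow learning in deep layers, one reason ReLU is widely used in hidden layers.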

How do reinforcement learning systems utilise neural networks?

DeepMind’s Atari-playing agents used deep Q-networks to learn decision-making from reward feedback, while AlphaGo combined policy and value networks with tree search. These approaches excel in dynamic environments requiring sequential actions.
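
Deep Q-networks build on Q-learning, which estimates how much future reward each action is worth. The sketch below shows the underlying update in its tabular form, assuming a hypothetical two-state problem, a learning rate `alpha` of 0.1 and a discount factor `gamma` of 0.9; a deep Q-network replaces the lookup table with a neural network trained towards the same target.

```python
def q_learning_update(q, state, action, reward, next_state, done, alpha=0.1, gamma=0.9):
    """One tabular Q-learning update; returns the target the estimate was moved towards."""
    best_next = 0.0 if done else max(q[next_state].values())
    target = reward + gamma * best_next  # reward now plus discounted best future value
    q[state][action] += alpha * (target - q[state][action])
    return target

# Hypothetical two-state problem with actions "left" and "right"
q = {"s0": {"left": 0.0, "right": 0.0},
     "s1": {"left": 0.5, "right": 2.0}}
target = q_learning_update(q, "s0", "right", reward=1.0, next_state="s1", done=False)
print(target, q["s0"]["right"])
```

Here the target is 1.0 + 0.9 × 2.0 = 2.8, and the estimate for taking "right" in "s0" moves 10% of the way from 0 towards it, to 0.28.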

Are there ethical concerns with neural network applications?

Bias in training data can perpetuate discrimination, as seen in facial recognition controversies. Transparent model architectures and diverse datasets help mitigate these risks.

What hardware accelerates neural network training?

GPUs from NVIDIA or Google’s TPUs optimise parallel computations. Specialised chips reduce training times for large models like GPT-4 by handling matrix operations efficiently.