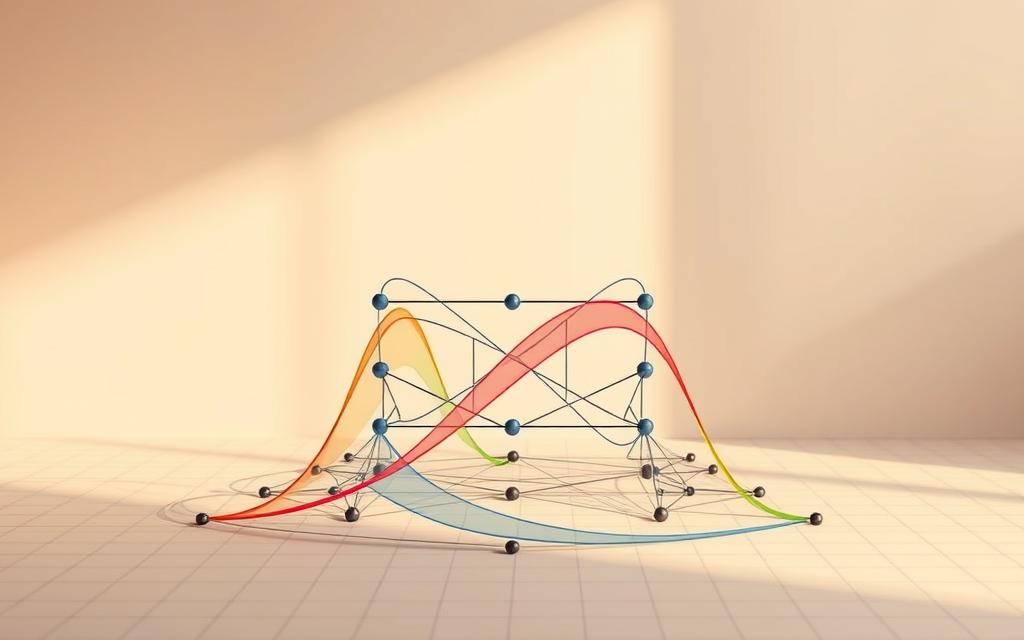

At the heart of every neural network lies a component that transforms simple calculations into intelligent decisions. These components, known as activation functions, act as gatekeepers for information flow. They determine which signals progress through layers and how data gets processed – a fundamental requirement for building sophisticated AI systems.

Without these mathematical tools, deep learning models would struggle to recognise patterns beyond basic linear relationships. The functions introduce non-linear properties, enabling networks to handle complex tasks like image recognition or language translation. This capability mirrors biological processes, where neurons fire only when stimuli reach specific thresholds.

Modern AI applications rely on carefully chosen activation mechanisms to balance accuracy with computational efficiency. Different functions suit particular scenarios – some prevent gradient issues during training, while others manage output ranges effectively. Selecting the right option often determines a model’s ability to learn intricate data representations.

Practitioners must understand how these components influence learning speed and prediction quality. Proper implementation unlocks a network’s full potential, making activation choices pivotal in developing high-performing machine learning solutions.

Understanding the Role of Activation Functions

Artificial intelligence systems rely on mathematical components to process complex data patterns. These components transform raw inputs into actionable insights, shaping how machines interpret information.

What Is an Activation Function?

At their core, these mathematical tools decide whether a neuron transmits signals. They analyse weighted input values and produce output through specific thresholds. This process prevents irrelevant data from progressing through neural networks.

Biological Inspiration and Neural Networks

Human neurons fire when stimuli surpass certain intensities. Similarly, artificial systems mimic this behaviour using computational thresholds. Nodes in neural networks process signals like biological counterparts, adapting responses based on learned patterns.

Key differences between biological and artificial systems:

| Aspect | Biological Neurons | Artificial Neurons |
|---|---|---|
| Input Signals | Chemical/electrical impulses | Numerical data points |
| Activation Threshold | Dynamic (varies with synapses) | Predefined mathematical rules |
| Learning Mechanism | Synaptic strengthening | Backpropagation algorithms |

Modern implementations balance biological inspiration with computational efficiency. The right choice of activation function determines how effectively networks recognise intricate relationships in datasets. This selection impacts both training stability and prediction accuracy.

The Importance of Non-Linearity in Deep Learning

Modern machine learning systems face a critical challenge: real-world data rarely follows straight-line patterns. This complexity demands mathematical tools that bend and adapt to uncover hidden relationships. Non-linear components become indispensable for handling everything from stock market trends to medical imaging analysis.

Limitations of Linear Transformations

Linear calculations excel at solving simple correlations but falter with intricate datasets. A model relying solely on straight-line equations couldn’t distinguish cat photos from dog images, nor predict language nuances. Even multiple stacked layers using linear rules collapse into single-layer effectiveness.
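
The collapse of stacked linear layers can be checked by composing two of them by hand. The symbols $W_1, b_1, W_2, b_2$ below are illustrative names for the weights and biases of two consecutive layers, not notation used elsewhere in this article:

```latex
\begin{aligned}
h &= W_1 x + b_1 \\
y &= W_2 h + b_2 = (W_2 W_1)\,x + (W_2 b_1 + b_2) = W' x + b'
\end{aligned}
```

Because $W' = W_2 W_1$ and $b' = W_2 b_1 + b_2$ are again just a matrix and a vector, the two layers compute nothing that a single linear layer could not.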

| Aspect | Linear Approach | Non-linear Solution |
|---|---|---|
| Pattern Recognition | Basic trends only | Hierarchical features |
| Model Depth Value | No benefit from layers | Enhanced through layers |
| Real-World Application | Limited to simple tasks | Supports advanced AI |

How Non-linear Activation Functions Enhance Learning

Curved mathematical operations enable systems to map unpredictable data relationships. These components create decision boundaries that twist through multi-dimensional spaces, capturing intricate patterns. Each layer builds upon previous transformations, gradually assembling sophisticated interpretations.

Contemporary breakthroughs in facial recognition and machine translation stem from this layered non-linear processing. The strategic introduction of curvature allows models to generalise better, adapting to novel inputs while maintaining computational efficiency.

Why Do We Need Activation Functions in Neural Networks?

Sophisticated algorithms rely on mathematical gatekeepers to evolve beyond basic computations. These components transform rigid calculations into adaptive decision-making processes, enabling systems to tackle real-world complexity.

Impact on Model Complexity

Consider a system operating solely through linear transformations. Multiple layers would collapse into single-step equations, rendering deep architectures useless. This limitation stems from linear algebra rules – stacked linear operations produce linear outputs regardless of depth.
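
A small numerical check makes this concrete. The sketch below uses plain Python lists and arbitrarily chosen, hypothetical weights (`W1`, `b1`, `W2`, `b2` are not taken from any real model). It shows that two stacked linear layers give the same output as one merged layer, and that inserting a ReLU between them breaks the equivalence:

```python
def affine(weights, bias, x):
    """Apply y = W x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in a]

def relu(v):
    """Element-wise ReLU on a list of numbers."""
    return [max(0.0, t) for t in v]

# Hypothetical weights for two stacked layers (2 -> 3 -> 2 units).
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
b1 = [0.0, 1.0, -1.0]
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.5]]
b2 = [0.5, -0.5]

# Equivalent single layer: W' = W2 W1 and b' = W2 b1 + b2.
W_merged = matmul(W2, W1)
b_merged = [sum(w * b for w, b in zip(row, b1)) + c for row, c in zip(W2, b2)]

x = [2.0, -3.0]
two_layers = affine(W2, b2, affine(W1, b1, x))
one_layer = affine(W_merged, b_merged, x)
with_relu = affine(W2, b2, relu(affine(W1, b1, x)))

print("two linear layers:", two_layers)
print("one merged layer: ", one_layer)
print("with ReLU between:", with_relu)
```

The first two results agree for any input, whereas the ReLU version differs whenever a hidden unit receives a negative value.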

Non-linear elements break this mathematical deadlock. They enable hierarchical feature extraction, where initial layers detect edges in images while subsequent ones recognise complex shapes. This layered processing mirrors how humans interpret visual or linguistic patterns.

| Model Type | Capabilities | Real-World Use |
|---|---|---|
| Linear Only | Basic trend analysis | Sales forecasting |
| With Non-Linear Components | Image recognition | Medical diagnostics |

Modern machine learning applications like voice assistants demonstrate this principle. Without curvature-inducing mechanisms, speech patterns would overwhelm simplistic models. The strategic introduction of non-linear thresholds allows systems to filter noise and prioritise relevant signals.

Architectural depth gains meaning through these transformations. Each layer builds upon distorted feature spaces, creating intricate decision boundaries that linear approaches cannot replicate. This mathematical empowerment separates basic regression tools from true artificial intelligence frameworks.

Overview of Neural Network Architecture

Neural networks achieve their power through a carefully structured arrangement of computational layers. This hierarchy enables systems to transform raw data into actionable insights, much like how biological brains process sensory inputs.

Input, Hidden, and Output Layers

The input layer acts as a data gateway, receiving numerical values without altering them. For image recognition tasks, this might handle pixel intensities directly. Only the first hidden layer sees these raw values; every later layer works on representations already transformed by the layers before it.

Hidden layers perform the heavy lifting through weighted connections and mathematical operations. As data progresses through these layers, features become increasingly abstract. A system analysing text might detect grammatical structures here before reaching final conclusions.

“The magic happens in the hidden layers – that’s where simple numbers become meaningful patterns,” observes Dr. Eleanor Hart, machine learning researcher at Cambridge University.

The Role of Nodes and Neurons

Each node functions like a miniature decision-maker within layers. These artificial neurons combine inputs using learned weights, add bias terms, then apply activation functions to determine outputs. Multiple nodes working in parallel enable complex pattern recognition.
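
The computation inside a single node can be sketched in a few lines. This is a minimal illustration in plain Python; the function name `neuron` and the example inputs, weights, and bias are hypothetical values chosen for demonstration only:

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical values for one node with three inputs.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

print("ReLU output:   ", neuron(inputs, weights, bias, relu))
print("Sigmoid output:", neuron(inputs, weights, bias, sigmoid))
```

The weighted sum here is negative, so ReLU silences the node entirely while sigmoid still passes a value below 0.5. The same pre-activation can therefore lead to quite different signals depending on the function chosen.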

| Layer Type | Primary Role | Typical Activation |
|---|---|---|
| Input | Data reception | None |
| Hidden | Feature abstraction | ReLU variants |
| Output | Result delivery | Task-specific |

Architectural choices directly impact model performance. While hidden layers often share one activation mechanism, the output layer is chosen to match the task. Regression tasks typically use a linear (identity) output, binary classification a sigmoid, and multi-class classification a softmax.
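
As an illustration of a task-specific output layer, here is a minimal softmax sketch in plain Python. The `logits` values are made up, and subtracting the maximum score is a common numerical-stability trick rather than something this article prescribes:

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for a three-class problem.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)

print("probabilities:", [round(p, 3) for p in probs])
print("sum:", sum(probs))
```

The largest score receives the largest probability, and the ordering of the classes is preserved.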

Exploring Classic Activation Functions

Early AI systems relied on foundational mathematical tools to process information. These pioneering mechanisms laid the groundwork for modern pattern recognition, though their limitations shaped subsequent innovations in machine learning design.

Binary Step and Linear Functions

The binary step operates like a strict gatekeeper. It outputs either 0 or 1 based on whether inputs cross a fixed threshold. While simple conceptually, this approach creates training roadblocks: its gradient is zero everywhere except at the threshold, where it is undefined, so backpropagation has no signal with which to adjust the weights.

Linear mechanisms maintain direct proportionality between input and output. This relationship causes critical architectural flaws. As Dr. Ian Patel from UCL notes: “Stacked linear layers mathematically collapse into single-layer systems, nullifying the benefits of deep architectures.”

| Function Type | Output Range | Training Impact |
|---|---|---|
| Binary Step | 0 or 1 | Zero gradient issues |
| Linear | (-∞, ∞) | Layer collapse |
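
The training problems of both functions show up in their gradients. The sketch below estimates the slope numerically with a central difference. The helper `numerical_gradient` and the sample points are illustrative choices, not part of any library:

```python
def binary_step(x, threshold=0.0):
    """Output 1 when the input reaches the threshold, otherwise 0."""
    return 1.0 if x >= threshold else 0.0

def linear(x, slope=1.0):
    """Output proportional to the input."""
    return slope * x

def numerical_gradient(f, x, eps=1e-4):
    """Central-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

points = (-2.0, -0.5, 0.5, 2.0)
step_grads = [numerical_gradient(binary_step, x) for x in points]
linear_grads = [numerical_gradient(linear, x) for x in points]

print("binary step gradients:", step_grads)
print("linear gradients:     ", linear_grads)
```

Away from the threshold the step function's gradient is zero, so no learning signal reaches the weights. The linear function has a constant gradient that carries no information about the input, which is consistent with the layer-collapse problem described above.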

Sigmoid and Tanh Functions

The sigmoid activation function brought crucial improvements through its S-shaped curve. It compresses any real number into 0-1 values, enabling probabilistic interpretations in classification tasks. However, extreme inputs lead to vanishing gradients that stall learning.

Tanh outputs values in the range (-1, 1). This zero-centred range often improves gradient flow during backpropagation, although tanh still saturates for large positive or negative inputs. Both mechanisms remain popular in specific scenarios despite their saturation tendencies.

| Feature | Sigmoid | Tanh |
|---|---|---|
| Output Range | 0 to 1 | -1 to 1 |
| Gradient Strength | Weak at extremes | Stronger near zero |
| Common Use | Binary classification | Hidden layers |
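
The saturation behaviour is easiest to see by evaluating the derivatives directly. This sketch relies on the standard identities σ'(x) = σ(x)(1 − σ(x)) and tanh'(x) = 1 − tanh²(x); the sample inputs are arbitrary:

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of sigmoid: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2

for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.6f}  tanh'={tanh_grad(x):.6f}")
```

At zero the sigmoid derivative peaks at 0.25 and the tanh derivative at 1, which is why tanh passes a stronger gradient near the origin. Both shrink towards zero as the input grows.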

Diving into Modern Activation Functions

Contemporary machine learning thrives on advanced mathematical tools that overcome historical limitations. These innovations maintain computational efficiency while solving persistent challenges like gradient disappearance and neuron saturation.

ReLU and its Variants

The rectified linear unit transformed deep learning through its straightforward operation. Positive inputs flow unchanged, while negative values get zeroed out – creating sparse, efficient activation patterns. This design slashes computational costs compared to older exponential-based methods.

However, the “dying ReLU” issue emerges when neurons permanently output zero. Variants like Leaky ReLU address this by allowing slight negative values through controlled slopes. Researchers at DeepMind note: “Parametric ReLU versions enable networks to learn optimal slopes during training, boosting adaptability.”
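
A minimal sketch of ReLU and Leaky ReLU, together with their gradients, shows where the "dying" behaviour comes from. The default `negative_slope=0.01` is a commonly used value assumed here for illustration. Parametric ReLU has the same form but treats the slope as a learned parameter:

```python
def relu(x):
    """Pass positive inputs unchanged, zero out the rest."""
    return max(0.0, x)

def relu_grad(x):
    """Gradient is 1 for positive inputs and 0 otherwise."""
    return 1.0 if x > 0 else 0.0

def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs are scaled instead of zeroed."""
    return x if x > 0 else negative_slope * x

def leaky_relu_grad(x, negative_slope=0.01):
    """Gradient never drops to zero, so the unit can still learn."""
    return 1.0 if x > 0 else negative_slope

for x in (-3.0, -0.5, 0.5, 3.0):
    print(f"x={x:5.1f}  relu={relu(x):5.2f} (grad {relu_grad(x)})  "
          f"leaky={leaky_relu(x):6.3f} (grad {leaky_relu_grad(x)})")
```

A ReLU unit whose inputs are always negative receives a zero gradient and cannot recover, whereas the leaky variant keeps a small gradient on the negative side.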

Swish and GELU Advantages

Swish, proposed by researchers at Google, multiplies each input by its own sigmoid, which produces a smooth, non-linear curve. Its authors reported that it matches or outperforms traditional ReLU activation on a number of deep models. Unlike ReLU's abrupt transition at zero, Swish changes gradually, which can improve gradient flow in deep architectures, including transformers.

GELU (Gaussian Error Linear Unit) weights each input by the probability that a standard normal variable falls below it, an idea motivated by stochastic regularisation. Its probabilistic nature suits modern language models, enabling nuanced feature learning. Some sources cite accuracy gains of 12-15% in multilingual translation tasks, although such figures depend heavily on the model, dataset, and baseline.

| Function | Key Feature | Best Use Case |
|---|---|---|
| ReLU | Computational simplicity | General-purpose networks |
| Swish | Smooth gradients | Deep vision systems |
| GELU | Probabilistic activation | Language models |
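
Both functions are short to write down. The sketch below uses the usual definitions, Swish as x · σ(βx) and exact GELU as x · Φ(x), where Φ is the standard normal CDF. The `beta=1.0` default is an assumption for illustration, and many libraries use a faster approximation of GELU instead of the exact form:

```python
import math

def swish(x, beta=1.0):
    """Swish: x * sigmoid(beta * x). With beta = 1 this is also called SiLU."""
    return x / (1.0 + math.exp(-beta * x))

def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def relu(x):
    return max(0.0, x)

for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:5.1f}  relu={relu(x):6.3f}  swish={swish(x):7.4f}  gelu={gelu(x):7.4f}")
```

Unlike ReLU, both functions return small negative values for moderately negative inputs and approach ReLU's behaviour as the input grows in either direction.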

Handling the Vanishing and Exploding Gradient Problems

Gradient instability remains a pivotal challenge in AI system development. During backpropagation, weight adjustments can become insignificant or excessively large, stalling learning or causing numerical instability. These issues particularly affect deep architectures with multiple processing layers.

Understanding Vanishing Gradients

When gradients diminish through successive layers, weight updates lose effectiveness. This often occurs with certain mathematical operations that compress input values, leading to shallow slope regions. Early architectures using sigmoid-style curves frequently encountered this limitation.
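
A deliberately simplified calculation shows how quickly the signal can shrink. During backpropagation the chain rule multiplies one activation derivative per layer, and the sigmoid derivative never exceeds 0.25. The sketch below ignores the weights entirely (a simplifying assumption) and takes the best case, where every pre-activation is zero:

```python
import math

def sigmoid_grad(x):
    """Derivative of the sigmoid function at x."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def gradient_scale(depth, pre_activation=0.0):
    """Product of sigmoid derivatives across `depth` layers (weights ignored)."""
    return sigmoid_grad(pre_activation) ** depth

for depth in (1, 5, 10, 20):
    print(f"{depth:2d} layers -> gradient scaled by {gradient_scale(depth):.3e}")
```

Even in this best case, ten sigmoid layers scale the gradient by less than one millionth. Saturated units, whose derivatives are far below 0.25, make the effect worse.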

Strategies to Mitigate

Modern approaches combine architectural and algorithmic solutions. Initialising weights with care maintains signal strength across layers. Techniques like batch normalisation standardise inputs between processing stages, while residual connections bypass problematic transformations. For explosive scenarios, gradient clipping imposes strict thresholds during optimisation.
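
Two of these ideas fit in a short sketch. `clip_by_norm` rescales a gradient vector whose L2 norm exceeds a limit, and `he_init` draws weights with a variance scaled to the layer's fan-in. He-style initialisation is one common example of careful initialisation, chosen here as an assumption rather than something this article specifies. Both function names are hypothetical:

```python
import math
import random

def clip_by_norm(grads, max_norm):
    """Rescale a gradient vector so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

def he_init(fan_in, fan_out, rng=random):
    """He-style initialisation: zero-mean Gaussian with std sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

# An exploding gradient with norm 50 is rescaled to norm 5; its direction is kept.
clipped = clip_by_norm([30.0, 40.0], max_norm=5.0)
print("clipped gradient:", clipped)

# A small gradient passes through unchanged.
print("unchanged gradient:", clip_by_norm([0.3, 0.4], max_norm=5.0))

weights = he_init(fan_in=4, fan_out=3)
print("weight matrix shape:", len(weights), "x", len(weights[0]))
```

Clipping by norm preserves the direction of the update and only limits its size, which is why it is often preferred over clipping each component independently.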

FAQ

How do activation functions influence neural network performance?

Activation functions determine whether neurons fire, enabling networks to learn complex patterns. Without them, neural networks could only process linear relationships, severely limiting their capability to solve real-world problems like image recognition or natural language processing.

What makes ReLU preferable to sigmoid in hidden layers?

ReLU (Rectified Linear Unit) mitigates the vanishing gradient problem common with sigmoid because its gradient is exactly 1 for every positive input, so it does not saturate on that side. Its computational simplicity also accelerates training. Variants like Leaky ReLU address “dead neurons”: units whose inputs stay negative, which therefore output zero and stop learning.

When should softmax activation be used?

Softmax is ideal for output layers in multi-class classification tasks. It converts raw scores into probabilities, ensuring all outputs sum to 1. This makes it indispensable for models categorising data into distinct classes, such as identifying dog breeds from images.

Why does the vanishing gradient problem occur with sigmoid?

Sigmoid’s derivatives approach zero for extreme input values, causing minimal weight updates during backpropagation. This stalls learning in deep networks, making it less effective compared to alternatives like ReLU or Swish in modern architectures.

Can neural networks function without activation layers?

Omitting activation functions reduces the network to linear transformations, regardless of depth. This eliminates its ability to model non-linear relationships, rendering it ineffective for tasks like speech recognition or predictive analytics.

What advantages does GELU offer over ReLU?

GELU (Gaussian Error Linear Unit) introduces a probabilistic approach, smoothing the transition for negative values. This often improves performance in transformer-based models like BERT, providing better handling of nuanced data patterns compared to standard ReLU.

How does the tanh function differ from sigmoid?

Tanh outputs values between -1 and 1, centring data around zero, which can enhance convergence during training. While both suffer from vanishing gradients, tanh’s symmetry sometimes makes it more suitable for hidden layers in recurrent networks.
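
The two functions are closely related: tanh is a rescaled and shifted sigmoid. Writing $\sigma$ for the sigmoid function (notation introduced here for illustration), the standard identity is:

```latex
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} = 2\,\sigma(2x) - 1,
\qquad \sigma(x) = \frac{1}{1 + e^{-x}}
```

This explains why both share the same S-shape and saturation behaviour, and why they differ mainly in output range and centring.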

Are activation functions necessary in the input layer?

Input layers typically don’t require activation functions, as their role is to pass raw data to subsequent layers. Applying non-linear transformations here could distort initial features before the network processes them.