The journey from basic computational systems to advanced artificial intelligence architectures spans decades. Warren McCulloch and Walter Pitts first proposed neural networks in 1943, creating frameworks inspired by biological brain functions. These early systems relied on single-layer designs – simple structures limited by the technology of their time.

Modern advancements transformed this landscape. The deep learning revolution, driven by graphics processing units (GPUs) from the gaming industry, enabled networks to expand from one or two layers to architectures with 50+ layers. This depth – the defining feature of deep learning – allows machines to process data through intricate hierarchical transformations.

But when does this shift occur? Traditional machine learning models use shallow networks for pattern recognition. The transition happens when multiple hidden layers create abstract representations of data, enabling autonomous feature extraction. This complexity unlocks capabilities like real-time image analysis and natural language processing.

Today’s systems owe their success to both historical foundations and modern engineering. The fusion of theoretical concepts with GPU-powered processing has redefined what neural networks can achieve – marking not just added layers, but a fundamental leap in computational intelligence.

Overview of Neural Networks and Deep Learning

Artificial intelligence research has witnessed dramatic pendulum swings in its approach to computational systems. From early theoretical models to today’s sophisticated frameworks, the development of neural networks reveals a story of innovation, abandonment, and rediscovery.

Historical Evolution and Revival

First conceptualised in 1943 by Warren McCulloch and Walter Pitts, neural networks initially struggled with technological limitations. The 1980s saw renewed interest as researchers developed multi-layer architectures, only for the field to fall out of favour again in the 2000s, when other methods such as support vector machines took precedence.

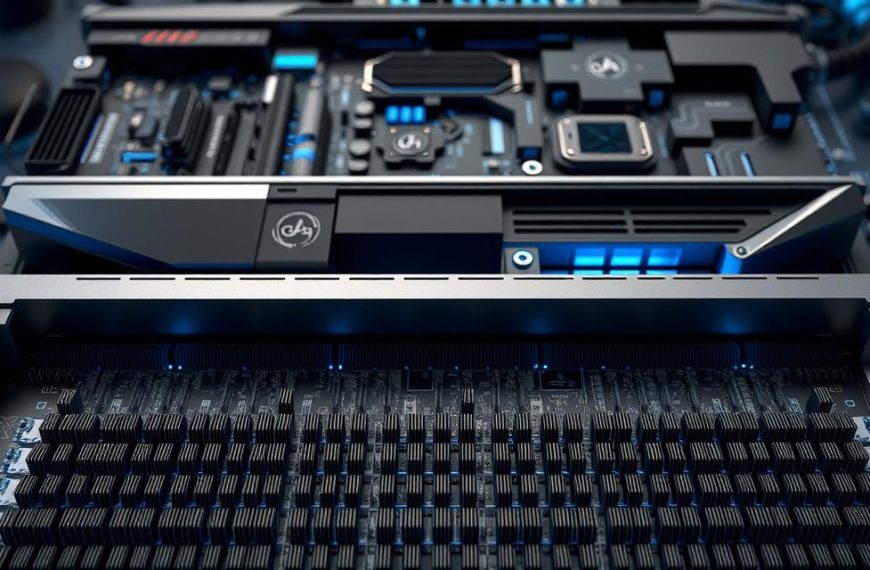

Modern resurgence emerged through an unlikely ally: gaming hardware. Graphics processing units (GPUs) provided the computational muscle needed to train complex systems, enabling layered structures that process data with unprecedented depth.

Fundamentals of Neural Processing

Contemporary systems rely on interconnected nodes arranged in layers. These feed-forward architectures:

- Process information sequentially from input to output

- Begin with randomised weights adjusted during training

- Refine their parameters through exposure to labelled examples
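The first two properties in the list above can be sketched in plain Python. This is a minimal illustration rather than a production design: the 3-4-2 layer sizes, the sigmoid activation, and the helper names `init_layer` and `forward` are all assumptions made for the example, and training is left out.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Create one dense layer with small random weights and zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(layers, inputs):
    """Pass the inputs through each layer in order, from input to output."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations

rng = random.Random(0)  # fixed seed so the random starting weights are repeatable
# Hypothetical 3-4-2 network: 3 inputs, one hidden layer of 4 nodes, 2 outputs.
network = [init_layer(3, 4, rng), init_layer(4, 2, rng)]
outputs = forward(network, [0.2, 0.7, 0.1])
```

Before training, the outputs are essentially arbitrary, because they depend only on the random starting weights.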

| Aspect | Traditional Machine Learning | Deep Learning |
|---|---|---|
| Layers | 1-2 hidden layers | 50+ hidden layers |
| Feature Extraction | Manual engineering | Autonomous learning |
| Data Requirements | Moderate datasets | Massive datasets |
| Compute Power | CPU-based | GPU-accelerated |

This evolution from manual feature design to autonomous pattern recognition marks the critical shift in machine learning methodologies. Modern architectures now handle tasks ranging from speech recognition to medical diagnostics through hierarchical processing of raw inputs.

When Does a Neural Network Become a Deep Learning Model

The distinction between standard machine learning techniques and their advanced counterparts lies in structural complexity. While traditional approaches use limited architectures, deep neural networks introduce hierarchical processing through layered designs. This progression enables autonomous feature discovery rather than manual engineering.

Exploring Layer Depth and Complexity

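Credit Assignment Path (CAP) depth offers one measure of a system’s sophistication. A CAP is the chain of learnable transformations between input and output; in a feed-forward network its depth is the number of hidden layers plus one, because the output layer is also parameterised. There is no universally agreed threshold, but a CAP depth greater than two is commonly treated as deep, since it enables multi-stage abstraction.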
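As a rough illustration of this rule of thumb, the sketch below computes CAP depth for a feed-forward network. It assumes the common convention that CAP depth equals the number of hidden layers plus one (counting the output layer), and the function names are hypothetical.

```python
def cap_depth(num_hidden_layers):
    """CAP depth of a feed-forward network: hidden layers plus the output layer."""
    if num_hidden_layers < 0:
        raise ValueError("num_hidden_layers must be non-negative")
    return num_hidden_layers + 1

def is_deep(num_hidden_layers, threshold=2):
    """Apply the 'CAP depth greater than two' rule of thumb."""
    return cap_depth(num_hidden_layers) > threshold
```

Under these assumptions, a network with one hidden layer has a CAP depth of two and counts as shallow, while two or more hidden layers cross the threshold.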

Consider image recognition systems. Initial layers might detect edges, while subsequent ones assemble shapes. Each tier builds upon prior interpretations, creating refined representations. This layered approach allows:

- Progressive feature extraction

- Reduced manual intervention

- Enhanced pattern recognition

The Role of Training Data and Output Layers

Deeper architectures demand substantial training data. Shallow models might function with thousands of examples, while deep neural networks often require millions. The final output layer synthesises accumulated insights into actionable results.
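One informal way to see why deeper models tend to need more data is to count their adjustable parameters. The sketch below assumes fully connected layers, and the layer sizes are hypothetical; parameter count is only a rough proxy for data requirements, not a rule.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # one weight per connection, one bias per node
    return total

# Hypothetical shallow model: 784 inputs, one hidden layer of 32 nodes, 10 outputs.
shallow = count_parameters([784, 32, 10])
# Hypothetical deep model: the same inputs and outputs with ten hidden layers of 512 nodes.
deep = count_parameters([784] + [512] * 10 + [10])
```

Here the shallow model has 25,450 parameters and the deep one has 2,770,954, roughly a hundred times as many values to fit from the training examples.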

| Factor | Shallow Networks | Deep Networks |
|---|---|---|
| Typical Layers | 1-3 | 10-100+ |
| Data Sensitivity | Low | Extreme |
| Feature Handling | Manual | Automatic |
| Compute Needs | Basic | Specialised |

Modern implementations prioritise depth over breadth. This shift enables handling raw inputs directly, bypassing tedious preprocessing. The transition occurs when layered structures independently develop meaningful data hierarchies.

The Evolution from Machine Learning to Deep Learning

A seismic shift occurred when gaming hardware met mathematical ingenuity. What began as graphical processing solutions for computer games inadvertently laid the groundwork for modern deep learning systems. This unexpected synergy transformed limited machine learning approaches into layered computational powerhouses.

Influence of GPU Advances

The gaming industry’s demand for realistic visuals birthed graphics processing units (GPUs) with thousands of cores. Researchers repurposed these chips to train neural networks far faster than was practical on traditional CPUs, because the many cores can perform a network’s arithmetic in parallel. Where 1980s systems struggled with three layers, modern GPUs now handle architectures exceeding 50 tiers.

Algorithmic Innovations and Complexity

Hardware alone couldn’t drive progress. Training methods such as backpropagation, paired with gradient descent optimisation, made multi-layer networks practical to train. As data volume and complexity grew, older models like support vector machines – prized for mathematical elegance – struggled to scale to large collections of raw, unprocessed inputs.
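To make backpropagation and gradient descent concrete, here is a minimal sketch on a deliberately tiny network with one weight per layer. The tanh activation, squared-error loss, learning rate, and function names are assumptions chosen for illustration; real systems apply the same chain rule to large weight matrices.

```python
import math

def forward(w1, w2, x):
    """Two-layer scalar network: hidden = tanh(w1 * x), output = w2 * hidden."""
    hidden = math.tanh(w1 * x)
    return hidden, w2 * hidden

def loss_and_grads(w1, w2, x, target):
    """Squared-error loss and its gradients via the chain rule (backpropagation)."""
    hidden, output = forward(w1, w2, x)
    error = output - target
    loss = 0.5 * error ** 2
    grad_w2 = error * hidden                       # dL/dw2
    grad_hidden = error * w2                       # dL/dhidden, passed back a layer
    grad_w1 = grad_hidden * (1 - hidden ** 2) * x  # tanh'(z) = 1 - tanh(z)^2
    return loss, grad_w1, grad_w2

def train(w1, w2, x, target, learning_rate=0.1, steps=200):
    """Plain gradient descent: move each weight a small step against its gradient."""
    for _ in range(steps):
        _, g1, g2 = loss_and_grads(w1, w2, x, target)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2
```

The error signal is computed once at the output and reused for the earlier layer, which is what keeps training tractable as layers are added.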

| Aspect | 2000s Systems | Modern Systems |
|---|---|---|
| Core Technology | CPU-based | GPU-accelerated |
| Typical Layers | 1-3 | 50+ |
| Data Handling | Manual features | Autonomous learning |

This dual progression – silicon muscle meeting smart learning algorithms – redefined artificial intelligence capabilities. By the early 2010s, deep learning had emerged as the dominant paradigm for complex pattern recognition tasks.

Key Deep Learning Components and Architectures

Architectural diversity in artificial intelligence systems enables targeted problem-solving approaches. Specialised designs process distinct data types – from pixel grids to language sequences – through optimised pathways.

Convolutional, Recurrent and Generative Networks

Convolutional neural networks (CNNs) dominate visual processing. Their layered structure extracts spatial hierarchies:

- Initial layers identify edges and textures

- Middle tiers assemble shapes

- Final layers recognise complete objects
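The edge-detecting behaviour of the initial layers can be sketched with a single convolution. The kernel below is hand-picked for illustration, whereas a trained CNN would learn its filters from data; the `convolve2d` helper and the 4x4 image are hypothetical.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-picked kernel that responds to dark-to-bright transitions from left to right.
vertical_edge = [[-1, 1], [-1, 1]]
feature_map = convolve2d(image, vertical_edge)
```

The resulting feature map is `[0, 2, 0]` on every row: the filter responds only where the dark and bright halves meet. Later layers would combine many such maps into shapes and objects.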

Recurrent neural networks (RNNs) handle sequential data through memory loops. These architectures excel at:

- Language translation

- Time-series forecasting

- Speech recognition
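The memory loop can be illustrated with a single recurrent unit. This is a simplified sketch with scalar weights chosen by hand (`w_x`, `w_h` and `b` are assumptions); practical RNNs use weight matrices learned during training.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: the new state mixes the input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence in order, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states
```

Calling `run_rnn([1.0, 0.0, 0.0])` yields a state that stays positive but shrinks after the first step, showing how an early input keeps influencing later steps through the hidden state.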

Generative adversarial networks (GANs) employ dual networks. One generates synthetic data while the other evaluates authenticity, refining outputs through competition.
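The competition between the two networks is usually expressed through a pair of loss functions. The sketch below assumes the common binary cross-entropy formulation with a non-saturating generator loss; `d_real` and `d_fake` are hypothetical names for the discriminator's probability estimates, each strictly between 0 and 1.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: reward high scores on real data, low scores on fakes."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: reward fooling the discriminator."""
    return -math.log(d_fake)
```

The two objectives pull in opposite directions: a higher `d_fake` lowers the generator's loss while raising the discriminator's.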

| Architecture | Primary Use | Key Feature |
|---|---|---|
| CNN | Image analysis | Spatial hierarchy extraction |
| RNN | Sequence processing | Memory retention |
| GAN | Data generation | Adversarial training |

Role of Hidden Layers and Nodes

Intermediate tiers transform raw inputs into abstract representations. Each layer prioritises different features:

- Early layers detect basic patterns

- Middle layers combine elements

- Final layers make high-level decisions

Individual nodes function as micro-processors. They apply mathematical operations to weighted inputs, activating when specific thresholds are met.
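A single node of this kind can be sketched in a few lines. The hard threshold shown here follows the classic threshold-unit idea; modern networks typically swap it for smooth activation functions. The weights and threshold are hypothetical values chosen so that the node behaves like a logical AND.

```python
def node_output(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs reaches the threshold."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum >= threshold else 0

# Hypothetical weights and threshold that make the node act as a logical AND.
and_weights, and_threshold = [1.0, 1.0], 2.0
```

With these values, `node_output([1, 1], and_weights, and_threshold)` fires, while any input containing a zero does not.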

| Component | Function | Impact |
|---|---|---|
| Hidden Layers | Feature abstraction | Determines model depth |
| Nodes | Pattern detection | Influences processing capacity |

Applications and Use Cases in Modern AI

Contemporary artificial intelligence systems demonstrate their prowess through practical implementations that shape daily digital interactions. From voice-activated assistants to medical diagnostics, deep learning architectures drive innovations once considered science fiction. These technologies excel at parsing unstructured data – a capability revolutionising how machines interpret human inputs.

Speech Recognition and Natural Language Processing

Smart speakers like Amazon’s Alexa utilise multi-layered architectures to interpret voice commands. Google’s translation services apply similar architectures across 100+ languages, approaching human-level quality on some language pairs. Key advancements include:

- Context-aware responses in chatbots

- Real-time transcription for video content

- Sentiment analysis in customer feedback systems

These natural language processing breakthroughs rely on hierarchical pattern recognition. Systems dissect linguistic elements from phonemes to semantic relationships, enabling nuanced communication.

Image Recognition and Object Detection

Facebook’s photo tagging algorithms exemplify deep learning in visual analysis. Autonomous vehicles employ similar technology to identify pedestrians and traffic signs. Modern implementations feature:

- Medical imaging diagnostics, with high accuracy reported on specific, well-defined tasks

- Retail inventory management through shelf monitoring

- Agricultural crop health assessment via drone footage

These systems process pixel data through successive abstraction layers. Early tiers detect edges and textures, while deeper layers reconstruct complete objects within complex scenes.

Bridging Theory and Practice in Deep Learning

Understanding advanced computational systems requires closing the gap between mathematical concepts and real-world implementation. While deep learning achieves remarkable results, its decision-making processes often resemble enigmatic puzzles. Researchers face dual challenges: making layered architectures interpretable while ensuring they remain efficient for practical tasks.

Interpreting Neural Network Decisions

Modern analysis tools now reveal how layered systems process data. Techniques like activation mapping show which input elements influence specific outputs. These methods help explain why an image classifier recognises cats or how language models predict sentence structures.
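A simpler relative of these techniques is occlusion sensitivity, which is used here as a stand-in and is not activation mapping itself. The sketch masks each input in turn and records how far the output moves. The `toy_model` function is a hypothetical placeholder for a trained network.

```python
def occlusion_sensitivity(model, inputs, baseline=0.0):
    """Score each input by how much the output changes when it is replaced by a baseline."""
    reference = model(inputs)
    scores = []
    for i in range(len(inputs)):
        occluded = list(inputs)
        occluded[i] = baseline  # hide one input element at a time
        scores.append(abs(reference - model(occluded)))
    return scores

def toy_model(x):
    """Hypothetical stand-in for a trained network: a fixed weighted sum."""
    return 3.0 * x[0] + 0.0 * x[1] - 1.0 * x[2]

scores = occlusion_sensitivity(toy_model, [1.0, 1.0, 1.0])
```

The scores come out as `[3.0, 0.0, 1.0]`: the first input matters most and the second has no influence on this toy model's output.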

Optimisation Techniques and Model Practicality

Engineers balance accuracy with resource demands through architectural pruning and precision reduction. Strategies include:

- Removing redundant nodes without performance loss

- Compressing models for mobile deployment

- Automating feature selection across network layers
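Two of these ideas can be sketched on a flat list of weights. The example uses magnitude-based weight pruning, which zeroes individual weights rather than removing whole nodes, and a simple symmetric 8-bit quantisation scheme. Both are assumptions chosen for brevity, and the function names are hypothetical.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    n_prune = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:n_prune]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the 8-bit integers."""
    return [q * scale for q in quantized]
```

Pruning trades a little accuracy for sparsity, and quantisation trades precision for a smaller memory footprint. In practice, the model is re-evaluated after either step to confirm that performance is still acceptable.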

These advances transform theoretical constructs into deployable solutions. By marrying interpretability with efficiency, deep learning systems evolve from academic curiosities to indispensable tools across industries.